Results of the HCI-UX survey 2014

A while back (in September 2014) I ran a short, five-minute survey aimed at UX professionals to gather their thoughts on the links between their work and academic HCI research.

The survey was framed in the following way: "This survey is part of a research project exploring in what ways academic human-computer interaction (HCI) research can be made relevant to UX / IxD professionals. The goal of the research is to develop bridges between HCI research and UX / IxD practice."

The complete list of questions is also available.

- The respondents seem to be a mixed bunch. Although the survey was targeted at practitioners of UX / IxD, there was some representation from academic researchers as well.

- Some broad totals:

  - 209 surveys started

  - 138 were completed to some substantive degree (results below are drawn from this subset of the data)

  - 87 of these respondents seemed to be discernibly involved in 'practitioner work' to some degree

The first section below presents textual responses to the survey, drawn from a handful of questions where respondents could elaborate on, or perhaps disagree with, the survey itself. The second section presents numerical data gathered from the remaining questions. For the moment I have left all this largely unanalysed. You can draw your own conclusions, and I would like to hear about them.

Topics raised in the survey

Textual responses in the survey came from the following questions (more details here):

- General catch-all "any comments about the survey" text box.

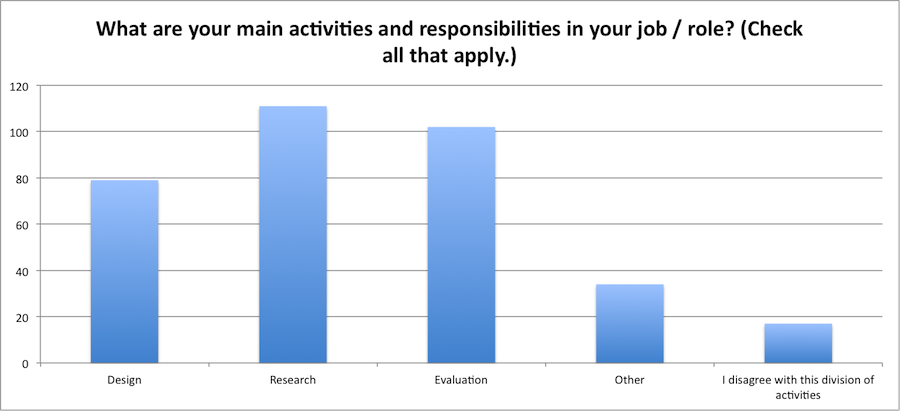

- A question about the "main activities and responsibilities in your job role?", with the options design, research and evaluation, plus an optional text box labelled "I disagree with this division of activities".

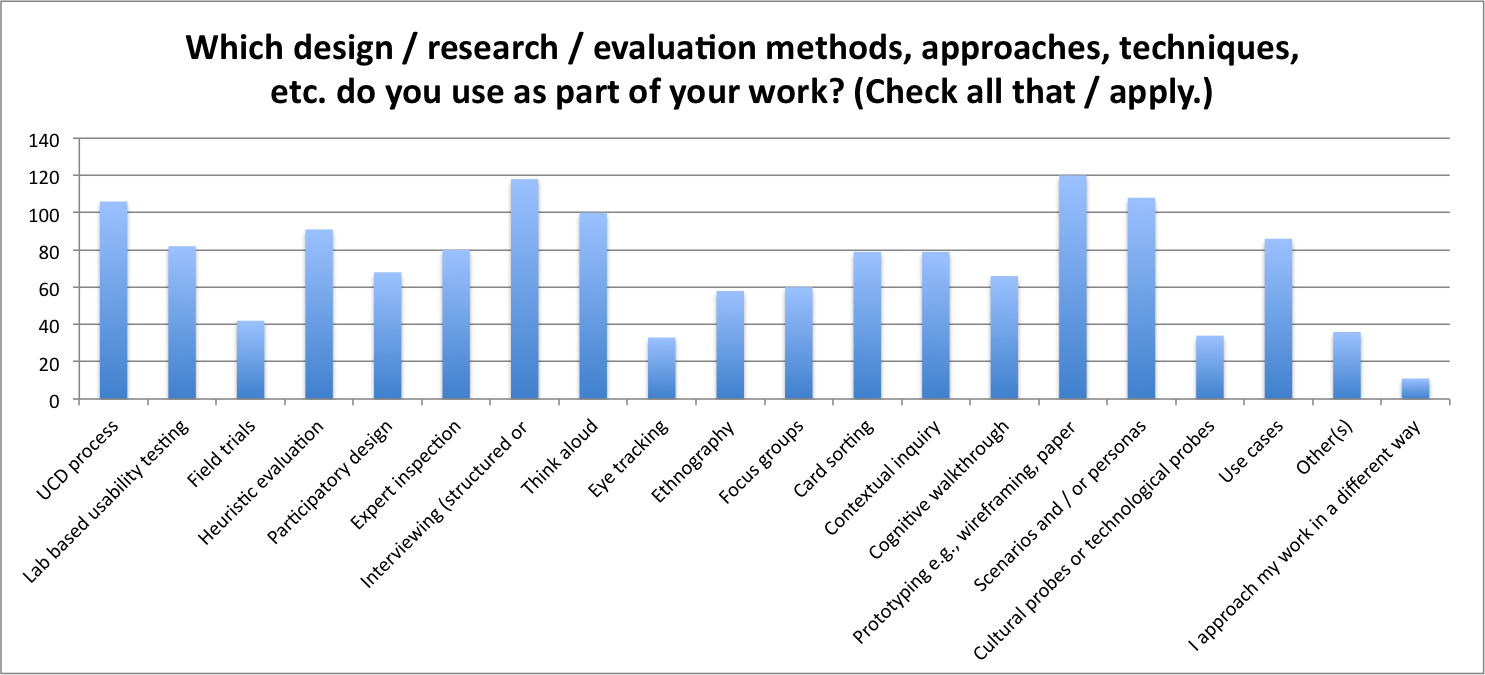

- A list of design / research / evaluation methods that the respondent employs, with an optional text box labelled "I approach my work in a different way not described by the options above".

In the selected quotations presented here, I have included, where possible, some context about the respondent's self-reported role (in square brackets after the quotation). Quotations have been edited for brevity, and basic typos and spelling errors have been corrected. I have added my own comments on the responses where relevant, set in italics to keep them distinct from the respondents' comments.

On the survey itself

The survey was set up in a way that introduced distinctions between UX and HCI, and between academia and industry. No respondents indicated a negative view of this purported gap (which could obviously be affected by the population attracted to responding to the survey), and indeed the wording of the survey itself did the job of eliciting recognition of this gap. For instance, as one person stated: "As a practitioner, I can tell the survey was made by an academic" [UX consultant]. Some respondents also questioned the use of a survey as a method of discovery for this research: "I don't see how this survey addresses the question you're attempting to answer" [UX professional].

On the topic of the survey

Most responses focussed on the topic of the survey, i.e., unpicking the links between academic HCI and UX in industry. These comments were generally made in the "any comments about the survey" text box. They are presented here in no particular order, but many of them highlight some key issues: HCI being out of touch with UX, communication problems, and differences in the speed at which each field moves.

- "there are similar tensions between academia and industry in accessibility (i.e. UX for people with disabilities)" [specialist accessibility consultant inc. UX]

- "There are very few people who have time and resources to dig through the research, digest, test in real-world scenario and disseminate the conclusions to the wider community in an approachable way (see Steve Krug). Wouldn't it be great to have more resources like this?" [front-end interface development support; guidelines, templates, etc.]

- "One of the challenges of HCI in academia is it doesn't move as fast as industry and is often wildly out of date. Look at CHI—there are only academics attending yet it thinks it's an industry conference, compared with IXDA or UXPA. The Academic community of HCI is about doing the right things to achieve tenure (nothing wrong in doing the right thing for promotion and getting the job you want) but the demands of industry are different." [ex-UX director; independent consultant]. *Here I feel the sentiment is correct about the logic at play in academic conferences like CHI, which does think of itself as an industry conference in terms of appealing to 'the practitioners', yet also serves as a way for academics to achieve all-important peer esteem and currency in the HCI community. However, in some senses I'd disagree with the point about HCI being "wildly out of date". I guess it depends on one's viewpoint; perhaps it is "wildly out of date" if one looks at CHI as a joint academia-industry conference.*

- "[...the web] gave an enormous boost to UX design. Seen from the other side [academic HCI], it looks like basic ergonomics and expertise of how to deal with human capabilities and constraints, is completely overtaken by commercial aspects of the design." [human factors consultant]

- "1. Seems HCI research doesn't really understand what it is like to be in the trenches in terms of how it works nor the rhythm, in fact they come off as above it as if real design work is banal; don't know what the real problems designers are dealing with. 2. Product development people don't have time to search & decode other people's thoughts in hope they will be relevant. A bridge would need to bring relevant information to them, in their own language." [UX manager]

- "I read a lot of research papers. While interesting, I haven't found a lot that is directly applicable to me as a Practitioner." [usability analyst]

- "What academics don't understand is that (1) UX is HCI—just a more updated term—if anything, HCI and IxD are specific areas within UX, (2) the term HCI is over 25 years old and it conveys to practitioners that academics are out of touch, limiting in how the actual work happens & what today's concerns are in the discipline. (3) [... academic researchers don't know about] the actual issues that are important and relevant to UX practitioner work—they aren't up to date on what problems UX tries to solve, how it is interdependent with other skillsets (Project Mgt, Agile, Software Dev, BA, BPM, ITSM, etc.)

  [...]

  the nature of academia does not prepare academics to understand the interactions and real-world ways that "UX" work gets done. [...] most academic research & textbooks related to HCI is out of date at best. Students in universities would get more out of reading a few practitioner books than almost all of the textbooks available [...] most HCI researchers don't understand or know how to evaluate/assess how UX work is done & the necessary inter-dependencies between UX practitioners themselves as well as other professionals (project managers, software developers, database designers, backend programmers, business analysis, BPM, etc) that also create the UX.

  [...]

  HCI researchers tend to see themselves as experts in HCI/UX—when they really aren't. And until they at least understand how the problems or solutions they want to study fit into the whole UX "process", their research results will not be very helpful or practically significant" [UX role including strategy]

Linked to these comments were responses to "I approach my work differently", which tended to make points about the nature of UX practice and about the way methods were presented to respondents as a discrete list.

- "Approach and tools/techniques used are not necessarily synonymous. I define the approach then look for the appropriate technique." [UX for digital agency]

- "Method inventories are not necessarily the best way to represent the way senior practitioners work. They appear to be discrete activities when in fact they are tools that are often used in combination in a variety of circumstances. There are also techniques from outside the HCI/UX/etc. disciplines that are very helpful in certain situations." [User-Centred Design and Information Architecture]

- "I have to do UI and UX at the same time" [UX instructor]

- "I would employ any of those techniques if they were appropriate to the client brief and research questions." [no description]

On roles and language

Various questions elicited responses that problematised the way roles and terminology were presented in the survey. Many of these help unpack the problems UX-ers experience in defining their roles, and some of the challenges of language when communicating across disciplines.

- There is a lack of clear definition to many roles both in HCI and UX: "Given the variety of UX/HCI/IxD positions, a question asking participants to explain/clarify their role would help provide context to their background" [UX and quality assurance]

- One respondent suggested that 'research' was itself a problematic term and to "continue unpacking [its] operational definition" [generalist practitioner: IxD and research]

On the choice of design, research and evaluation as indicators of what respondents did in their work, a number of comments highlighted problems with these terms and (again) the language issues that can arise when talking across HCI and UX.

- "All three words mean very different things to different people. User research for product design is called research, but it is not academic HCI research. Similarly, design means loads of different things and no-one quite knows what evaluation means." [UX director]

- "These three are good but I'd expect to see an architecture role within an interest in value proposition that the design should bring to the organisation. Most organisations I have worked with consider information architecture, interaction design and visual design to be distinct disciplines. Occasionally, copywriting is considered to be a UX discipline." [no description]

- "In my region [US] evaluation and research are often lumped together in professional environments" [no description]

- "I assume that "Research" means MSR-type research not connected to a product being built. 'Evaluation' does not adequately describe everything that design researchers do because it doesn't capture the generative front-end ethnographic work that we do." [Design researcher] *I interpret "MSR-type research" as referring to the style of corporate research labs such as Microsoft Research, where the work is more strongly 'academic' and 'basic research' than product-driven.*

- "Design includes user task analysis and evaluation throughout the design process." [human factors consultant]

- "I don't see a separation between evaluation and research. Many methodologies (such as user testing) are both research and design evaluation." [UX contractor]

- "I really dislike the division between research and design. To me it's all part of design." [design strategist]

- "They are too vague, esp. 'research' and 'design'. Academics define 'research' totally different than practitioners do, causing confusion." [Independent UX]

On survey options

Survey options themselves caused problems. Here are some examples:

- The options "concentrate[d] on having done/heard about something rather than how frequently or important the options are [...] I have been published in a HCI journal but I can't claim to read HCI research on a monthly basis" [UX role in digital agency]

- Scope of what was considered to be 'in' HCI and 'out' also created concerns: "I wondered if I published research-based best practices as books and papers on usability, does that count for you as HCI publications, or are you positing that there is a great divide and that HCI research is necessarily confined to the academy and its journals?" [Information Architecture role]

- Reporting the frequency of method application was not well-supported by survey options: "[a] simple yes/no of what I do is not fine-tuned enough. HOW OFTEN each is done is a lot better question to ask" [Independent UX role].

- The survey also didn't reflect differences in how UX might be embedded into wider organisations: "The UX maturity level of the org where I work is not consistent with my background. The survey does not catch that aspect." [UX in software development team]

Numerical results

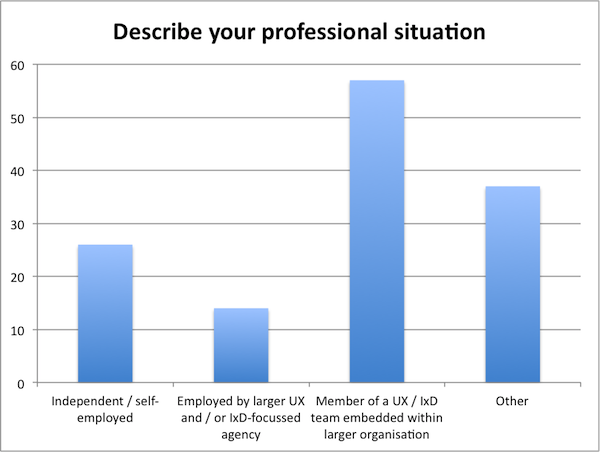

The self-reported makeup of the survey respondents was as follows:

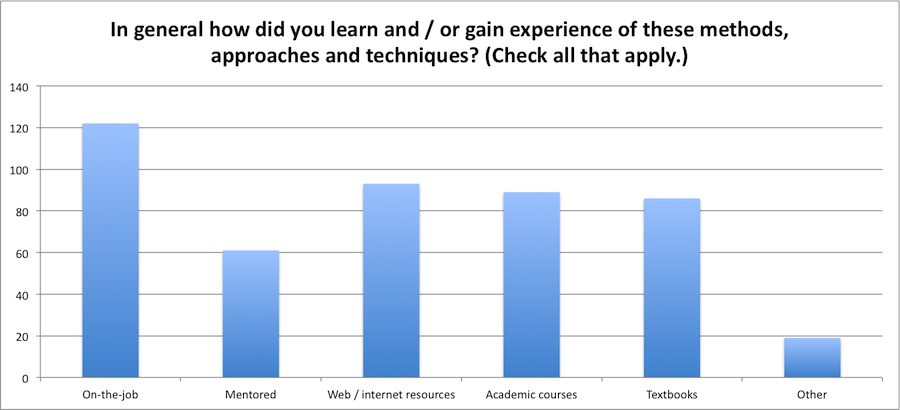

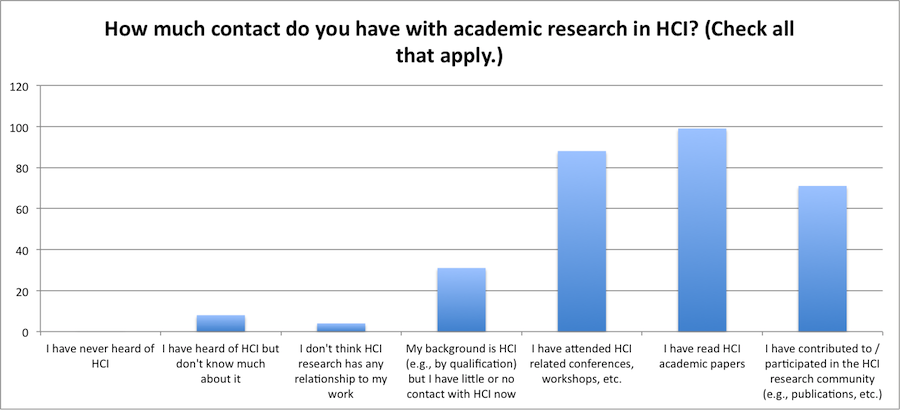

The following charts have been generated from the other (numerical) survey questions: