Teleoperation, Autonomous and Human-in-the-Loop Manipulation

The ability of robots to handle dense clutter or a heap of unknown objects fully autonomously remains very limited due to challenges in scene understanding, grasping, and decision making. In addition to developing autonomous grasping and complex prehensile and non-prehensile manipulation techniques, we conduct research on semi-autonomous approaches in which a human operator interacts with the system (through teleoperation, among other means) and gives high-level commands to complement autonomous skill execution.

Our research investigates paradigms for adapting the level of autonomy of a robotic system to the complexity of the situation and to the skills and states of the humans interacting with it or working nearby. Building on the semi-autonomous control framework developed in our lab, we are building a manipulation skill learning system that learns from the human operator's demonstrations and corrections, and can therefore acquire complex manipulation skills in a data-efficient manner.

Related grants:

HEAP Human Guided Learning and Benchmarking of Robotic Heap Sorting

HEAP is a research project funded by CHIST-ERA and EPSRC (EP/S033718/2) that investigates robot manipulation algorithms for robotic heap sorting. The project will provide scientific advancements in benchmarking, object recognition, manipulation and human-robot interaction. We focus on sorting a complex, unstructured heap of unknown objects (resembling nuclear waste made up of broken, deformed bodies) as an instance of an extremely complex manipulation task. The consortium aims to build an end-to-end benchmarking framework that includes rigorous scientific methodology and experimental tools for application in realistic scenarios.

Key Publications

- Ly, K. T., Poozhiyil, M., Pandya, H., Neumann, G., and Kucukyilmaz, A. (2021, August). Intent-Aware Predictive Haptic Guidance and its Application to Shared Control Teleoperation. In 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) (pp. 565-572). IEEE. https://ieeexplore.ieee.org/abstract/document/9515326

- Singh, J., Srinivasan, A. R., Neumann, G., and Kucukyilmaz, A. (2020). Haptic-guided teleoperation of a 7-dof collaborative robot arm with an identical twin master. IEEE Transactions on Haptics, 13(1), 246-252. https://ieeexplore.ieee.org/abstract/document/8979376

- Serhan, B., Pandya, H., Kucukyilmaz, A., and Neumann, G. (2022, May). Push-to-See: Learning Non-Prehensile Manipulation to Enhance Instance Segmentation via Deep Q-Learning. In 2022 International Conference on Robotics and Automation (ICRA) (pp. 1513-1519). IEEE. doi: 10.1109/ICRA46639.2022.9811645. https://ieeexplore.ieee.org/document/9811645

Personalized Wheelchair Assistance

An emerging research problem in assistive robotics is the design of methodologies that allow robots to provide human-like assistance to their users. In our research, we estimate assistance policies so that the robot can assist users more effectively through haptic shared control.

In our previous research, we built an intelligent robotic wheelchair that learns from human assistance demonstrations while the user is actively driving the wheelchair in an unconstrained environment. The results indicate that the model can estimate human assistance after only a single demonstration (one-shot learning), so that the robot can help the user by selecting the appropriate assistance in a human-like fashion.

Related grants:

Learning Assistance by Demonstration in Triadic Interaction for Intelligent Wheelchair Systems

Source: Scientific and Technological Research Council of Turkey (TUBITAK) 2219- International Postdoctoral Scholarship Programme

Role: Principal Investigator

Duration: 2014-2015

Budget: 22,800 Euros

Physical Human-Robot Interaction and Human-Autonomy Teamwork

We are interested in building haptics-enabled systems for interacting with robots in dynamic physical tasks. Previous work was conducted at the Robotics and Mechatronics Laboratory of Koc University in collaboration with the Chair for Information-oriented Control (S. Hirche), Technische Universitaet Muenchen. That study proposed a dynamic role exchange scheme for collaborative joint object manipulation with a human-sized mobile robot.

Key Publications

Alexander Moertl, Martin Lawitzky, Ayse Kucukyilmaz, Tevfik Metin Sezgin, Cagatay Basdogan, and Sandra Hirche. The Role of Roles: Physical Cooperation between Humans and Robots. The International Journal of Robotics Research, vol. 31, no. 13, pp. 1656-1674 (2012). See supplementary material: video

Physical Human-Human Interaction

To identify human interaction patterns, we have developed an application in which two human subjects interact in a virtual environment through the haptic channel using paired haptic devices.

Current research focuses on designing an online behavior classification technique for understanding the interaction between two humans.

Key Publications

Cigil Ece Madan, Ayse Kucukyilmaz, Tevfik Metin Sezgin, and Cagatay Basdogan. Recognition of Haptic Interaction Patterns in Dyadic Joint Object Manipulation. IEEE Transactions on Haptics, vol. 8, no. 1, pp. 54-66 (2015).

Related grants:

Source: EC-FP7 Marie Curie Actions-People Co-Funded Brain Circulation Scheme (Co-Circulation)

Title: Online Recognition of Interaction Behaviors in Dyadic Collaboration

Role: Principal Investigator

Duration: May 2015-May 2016

Budget: 71,900 Euros

Haptic Negotiation and Intent Recognition

Haptic negotiation involves a decision-making process in which the computer is programmed to infer the intentions of the human operator. Depending on the inferred intentions, the controller can adjust the control levels of the interacting parties to facilitate a more intuitive interaction. Our research investigates shared control architectures with haptic negotiation to improve communication between a human and a computer agent.
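As a rough illustration of what "adjusting the control levels of the interacting parties" can look like, the sketch below blends a human command and an agent command using a single authority weight. It is not the method from the publications listed here: the function names, the force threshold, and the step size are hypothetical, and the intent-inference rule (treating a large applied force as a sign that the human wants more control) is an assumption made only for the example.

```python
def blend_commands(human_cmd, agent_cmd, human_authority):
    """Linearly blend two command vectors.

    human_authority is clamped to [0, 1]; 1 means the human has full control,
    0 means the agent has full control.
    """
    a = min(max(human_authority, 0.0), 1.0)
    return [a * h + (1.0 - a) * g for h, g in zip(human_cmd, agent_cmd)]


def update_authority(authority, human_force, threshold=2.0, step=0.1):
    """Hypothetical negotiation rule (assumption, not from the source).

    If the magnitude of the force applied by the human exceeds the threshold,
    shift authority toward the human; otherwise shift it back toward the agent.
    The result is clamped to [0, 1].
    """
    if abs(human_force) > threshold:
        authority += step
    else:
        authority -= step
    return min(max(authority, 0.0), 1.0)


if __name__ == "__main__":
    authority = 0.5
    # Made-up force readings: the human pushes hard for three steps, then relaxes.
    for force in [3.0, 3.5, 4.0, 0.5, 0.2]:
        authority = update_authority(authority, force)
        command = blend_commands([1.0, 0.0], [0.0, 1.0], authority)
        print(round(authority, 2), [round(c, 2) for c in command])
```

A linear blend is only one possible arbitration scheme; it is used here because it makes the effect of the authority weight easy to read.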

Key Publications

Ayse Kucukyilmaz, Tevfik Metin Sezgin, and Cagatay Basdogan. Intention Recognition for Dynamic Role Exchange in Haptic Collaboration. IEEE Transactions on Haptics, vol. 6, no. 1, pp. 58-68 (2013).

Behavioral Multimodal Agents

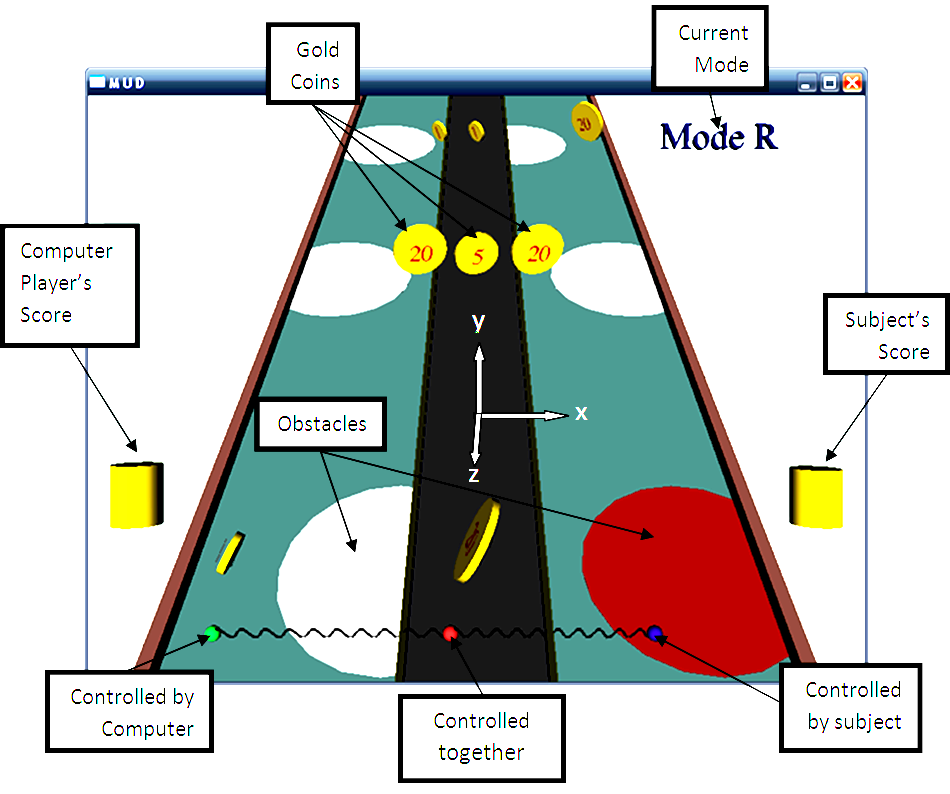

We investigate how human-computer interaction can be enriched by equipping computers with behavioral patterns proposed for two-party coordination games in the game theory literature. We are interested in studying how effectively multimodal sensory cues convey negotiation-related behaviors.

Key Publications

Salih Ozgur Oguz, Ayse Kucukyilmaz, Tevfik Metin Sezgin, and Cagatay Basdogan. Supporting Negotiation Behavior in Haptics-Enabled Human-Computer Interfaces. IEEE Transactions on Haptics, vol. 5, no. 3, pp. 274-284 (2012).