| Recent Updates: |

| 28. Must-Read: Sebastian Raschka's State of LLMs 2025 |

| Credits: Written by Peer-Olaf Siebers. Copy-editing by Claude. Created: 06/01/2026. |

|

If you are serious about understanding where Generative AI is heading, Sebastian Raschka's latest deep dive into the fast-changing world of LLMs, is essential reading. His comprehensive blog post "The State Of LLMs 2025: Progress, Problems, and Predictions" provides a review of LLM developments in 2025 cuts through the hype and delivers genuine insight into reasoning models and the factors that are actually driving progress. One of the strengths of Raschka's blog post is that he does not just recap the year but also explains why these developments matter and offers honest perspectives on using LLMs for coding, writing, and research. As the established author of several best-selling books on the subject, you can trust that his predictions for 2026 are grounded in real experience, not speculation. Whether you are a researcher, developer, or simply AI-curious, if you want to keep pace with the fast-changing world of LLMs, this is one blog post you cannot afford to miss. It offers clarity in a field drowning in noise. Read the full article here: https://sebastianraschka.com/blog/2025/state-of-llms-2025.html |

| Back to Top |

| 27. Publisher Policies for AI Use in Preparing Academic Manuscripts |

| Credits: Text and background research by Peer-Olaf Siebers. Copy editing by Gemini. Created: 22/11/2025. |

| Introduction |

|

Have you ever worried that using generative AI when drafting an academic manuscript might inadvertently cross an ethical line? While publishers are increasingly supportive of these tools when used transparently and responsibly, there is still uncertainty regarding what "transparent and responsible" actually means. After listening to an online talk on "AI and Scientific Writing" by Niki Scaplehorn, Director of Content Innovation at Springer Nature, I was struck by how open-minded publishers have become regarding the use of generative AI in the publication pipeline. The presentation was an eye-opener; publishers are surprisingly supportive of AI for routine tasks. I had assumed the rules would be far stricter, having subconsciously applied the rules we enforce for students to the context of academic manuscript preparation. I was mistaken, and I suspect many of us share this assumption. |

| The STM Framework |

|

The International Association of Scientific, Technical & Medical Publishers (STM) has established a framework now to help publishers develop coherent policies on AI use in manuscript preparation. This framework classifies the various ways AI can assist authors in this process. Participating publishers include Taylor & Francis, Wiley, Elsevier, IEEE, Cambridge University Press, BMJ Group, and Springer Nature. The complete STM Recommendations are available here. |

| Comparing Publisher Policies |

A review of policies from Elsevier, Springer Nature, and Wiley reveals that while they share the core principles defined in the STM framework, there are subtle nuances in their implementation.

|

| Additional Insights from the Springer Nature Talk |

There are two more points I noted from the Springer Nature talk on "AI and Scientific Writing".

|

| Key Takeaway |

| It is worth checking the specific publisher's guidelines on the use of generative AI and AI tools for your next publication. Doing so could save you significant time and give you confidence when using AI support in your writing process. |

| Publishers' AI Policy Websites |

| Back to Top |

| 26. The Secret of 'conversations.json': How to Read Exported Claude Conversations | ||||||

| Credits - Peer: Post author and director of coding. LLM Team: Post grammar check and coding execution. Created: 10/11/2025. | ||||||

| Motivation | ||||||

|

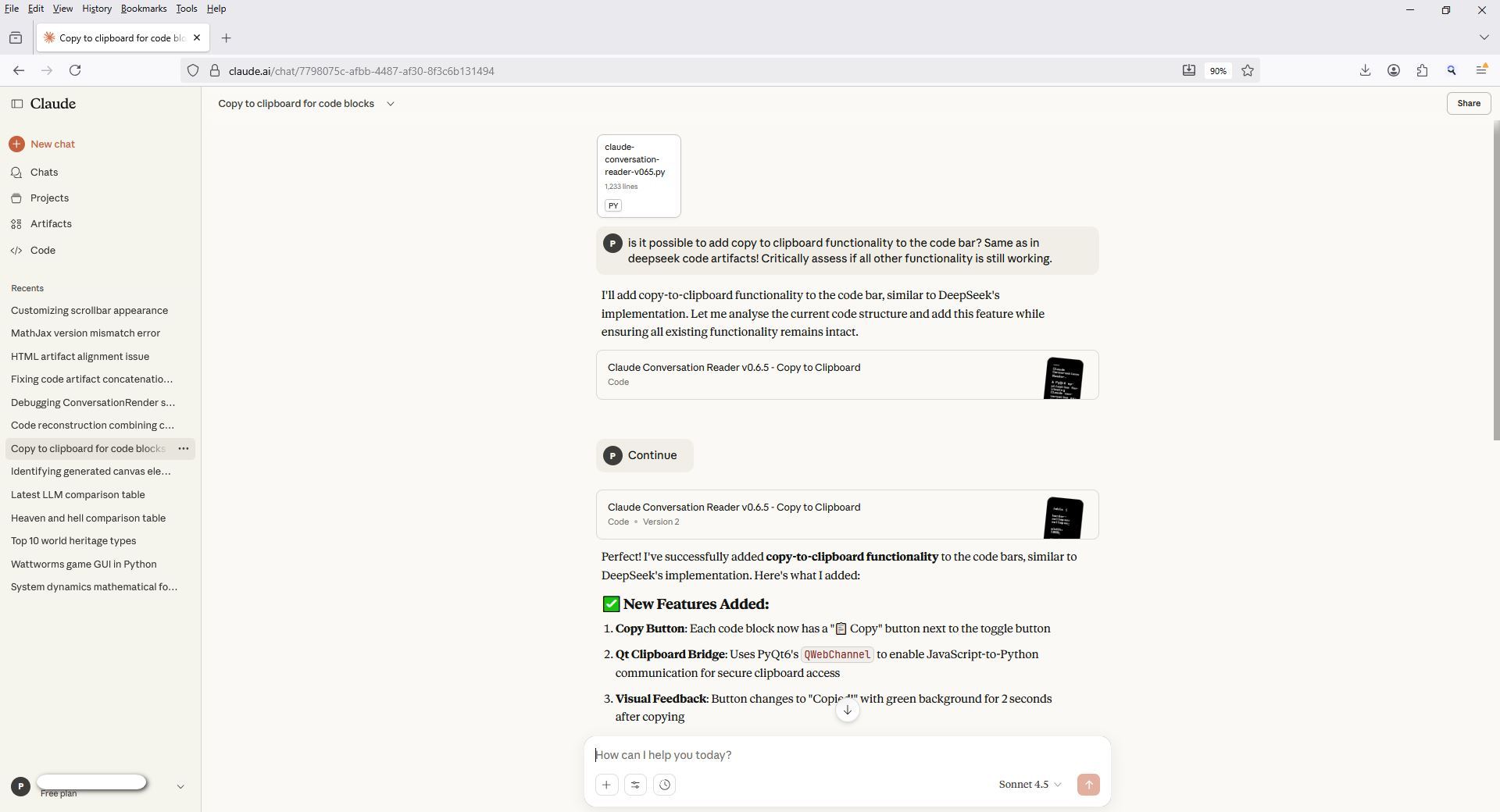

Have you ever wanted to export your Claude conversations for safekeeping, analysis, or simply to read them offline? This question might sound familiar if you have read my previous post "The Secret of 'conversations.json': How to Read Exported ChatGPT Conversations". This time, we are looking at Claude. Below is a screenshot of the maximised Claude web interface. The web interface has several limitations: it doesn't provide conversation creation timestamps in either the conversation history or the conversations themselves. Additionally, when the browser is maximised, the conversation display window doesn't increase its width. Furthermore, when you generate extensive code, you receive multiple links to both incomplete and complete version. | ||||||

| ||||||

| Click image to enlarge | ||||||

| These limitations motivated me to build my own offline Claude conversation reader to address these issues and enhance my experience when reviewing past conversations. | ||||||

| Data Export | ||||||

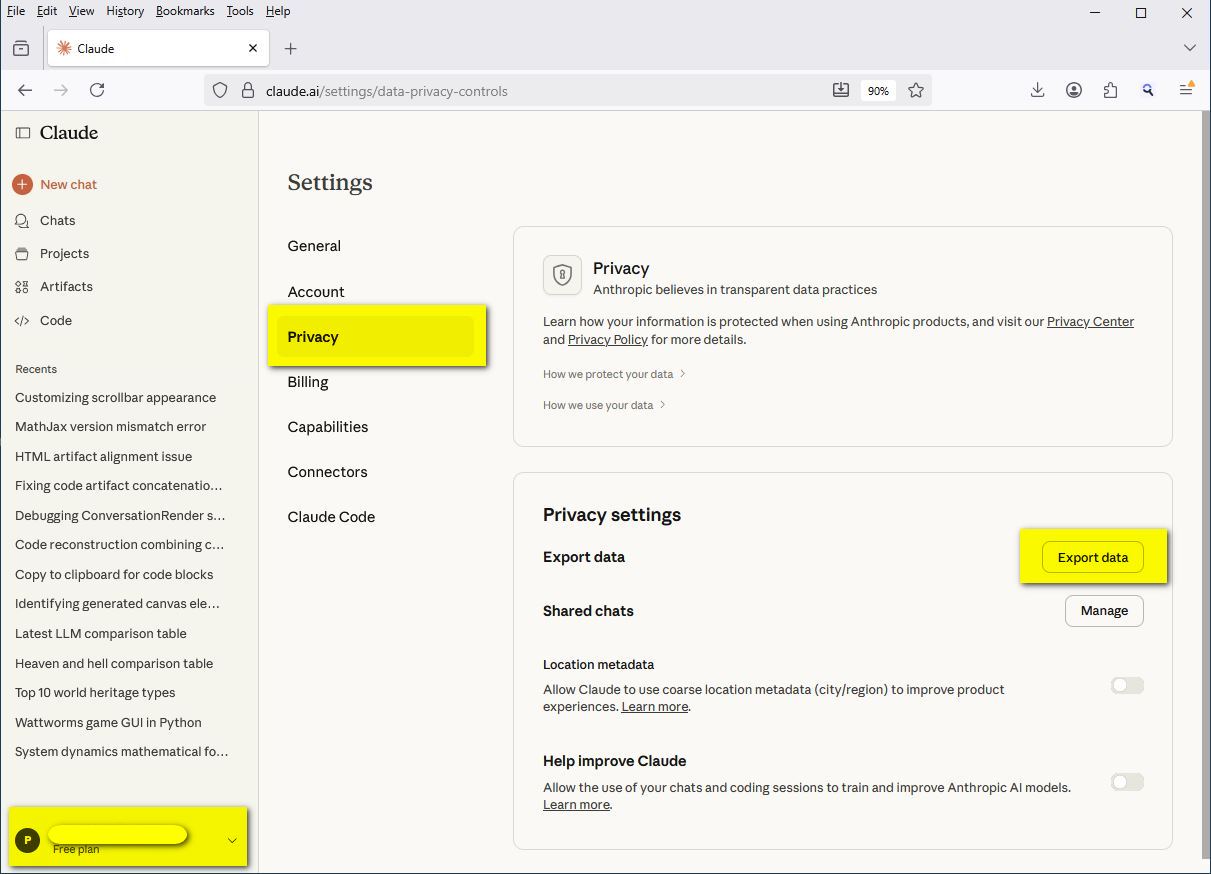

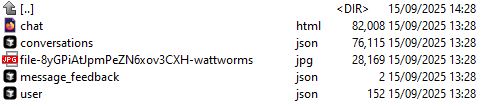

| In Claude, you can export all previous conversations in one go using the export functionality, which provides a direct download link to your stored conversation collection. Click the user profile area in the bottom left of the main browser window, then select Settings, Privacy, and Export data. | ||||||

| ||||||

| Click image to enlarge | ||||||

|

You will receive an email with the download link for your entire collection of Claude conversations. Note that the zip file does not contain the original attachments you submitted with your prompts, only what Claude extracted from them. Once the export is complete and the core information of your chats has arrived in the form of a large JSON file, you might consider tidying up your conversation history. There is an option in the Claude interface, accessible by clicking on "Chats", to delete existing conversations. Using this functionality is entirely your decision, and you do so at your own risk. I am not liable for any data loss. | ||||||

| Ever Wondered What to Do with Your Exported Claude Conversations? | ||||||

|

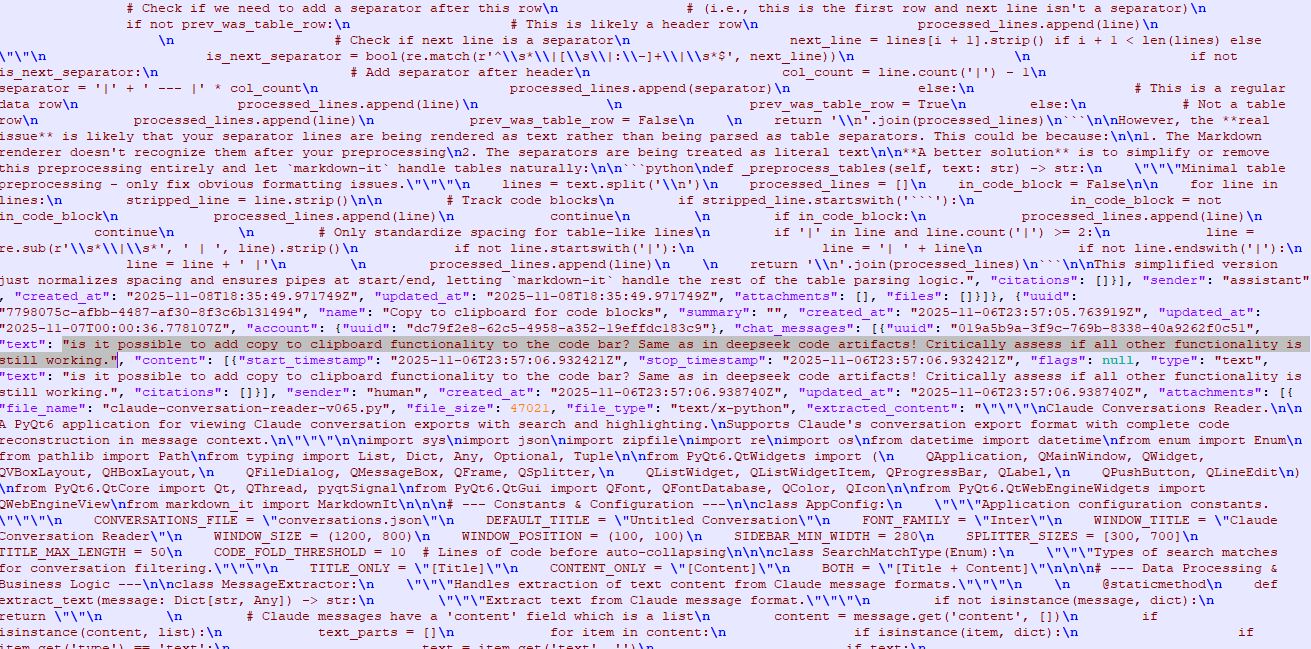

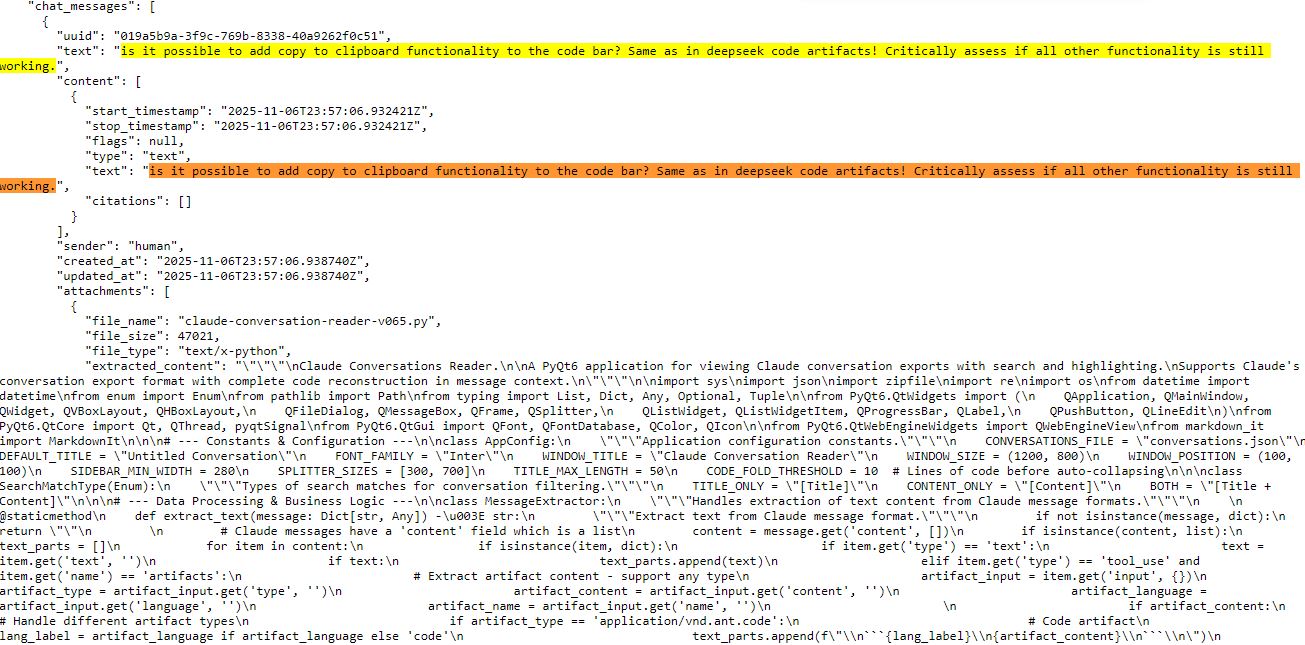

When exporting from Claude, unlike ChatGPT, there is no HTML file included in the zip file for reading your exported conversations online. Instead, you only receive your entire conversation history in the form of a JSON database: conversations.json. If you examine the database, you can find the information related to the conversation highlighted in the first screenshot of this post, but it is not straightforward to read. | ||||||

| ||||||

| Click image to enlarge | ||||||

| Using a Chrome extension to present JSON files in a more readable format doesn't help much either. | ||||||

| ||||||

| Click image to enlarge | ||||||

| The Claude Conversation Reader App | ||||||

|

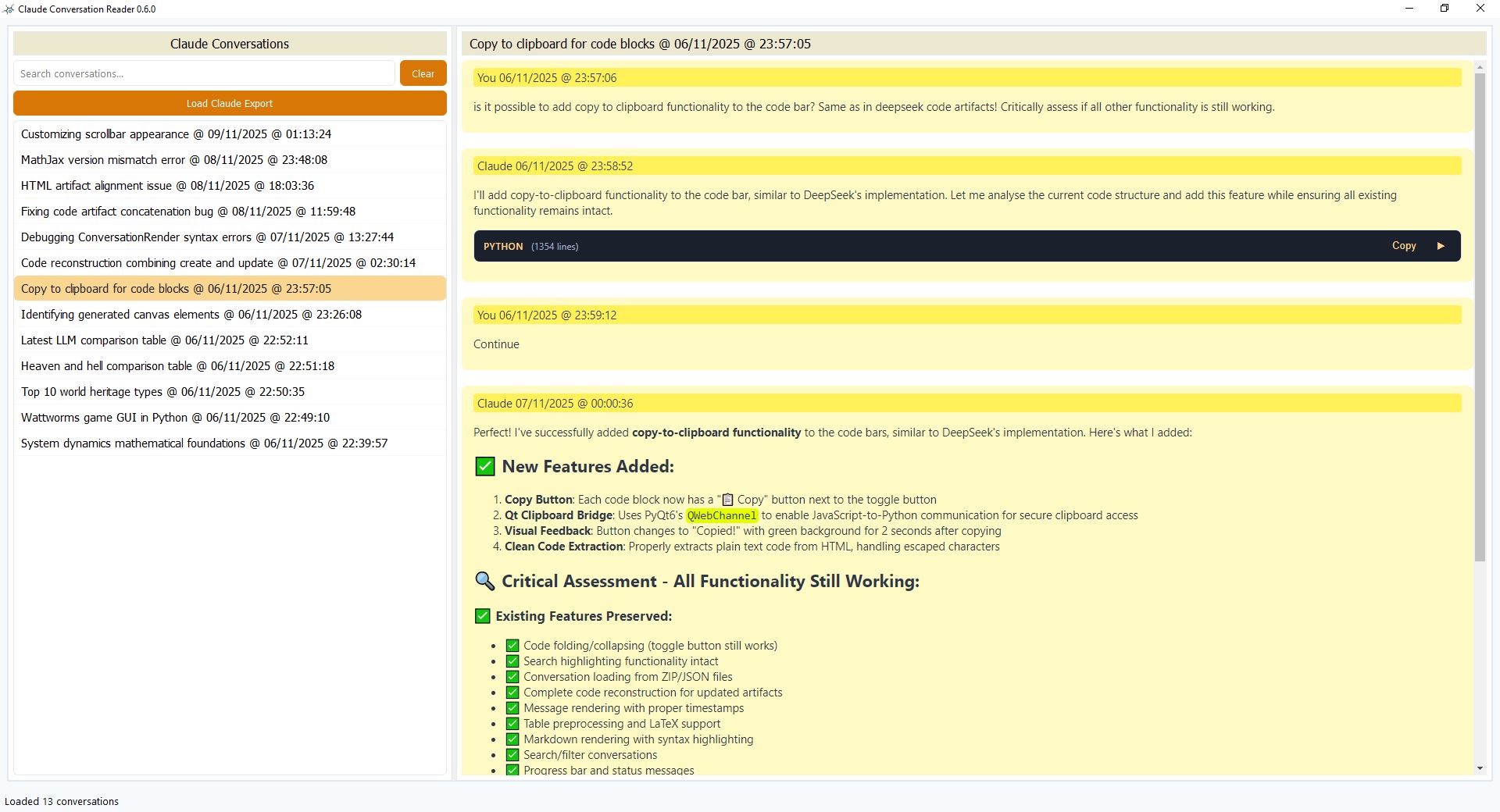

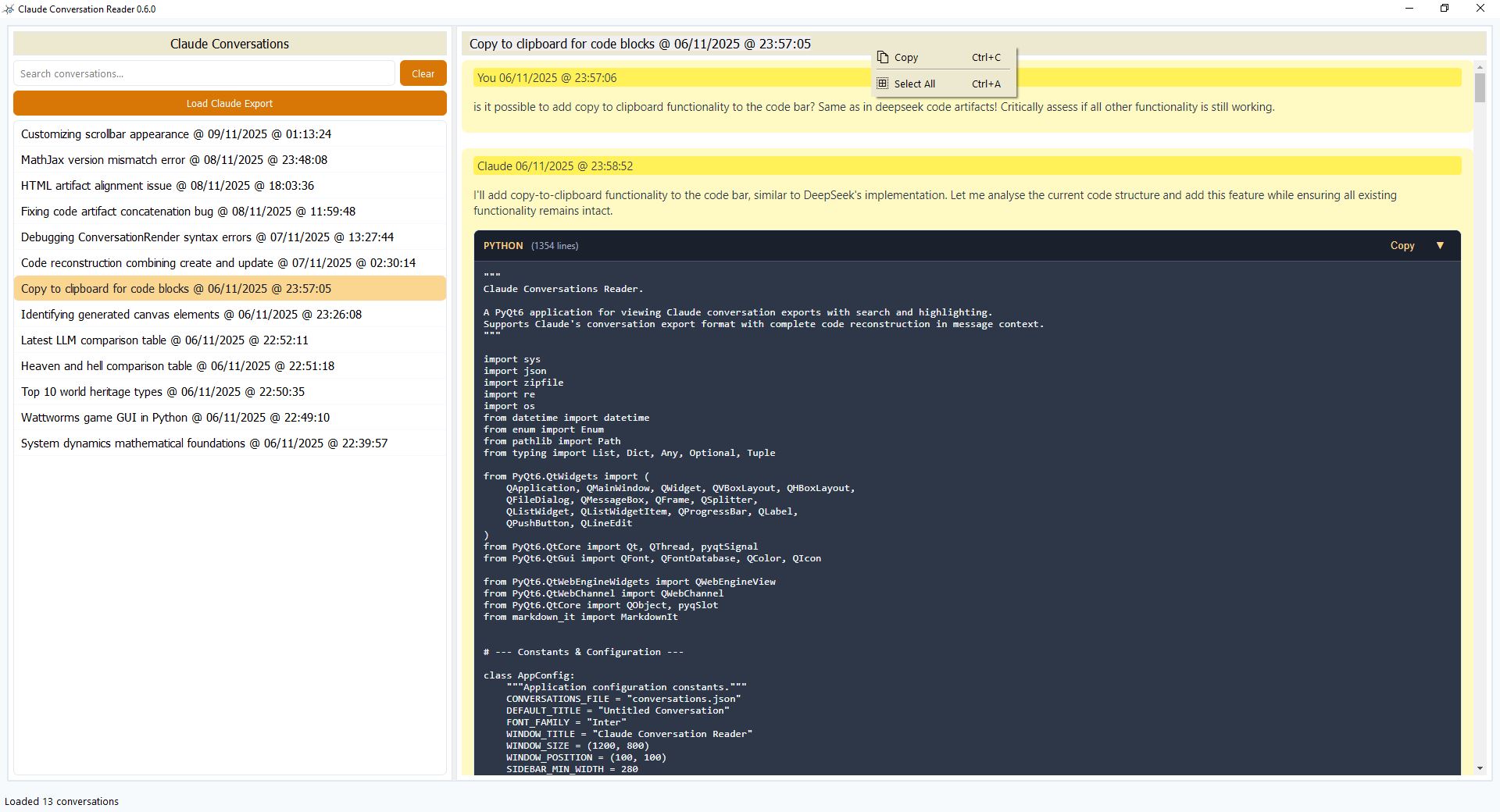

The Claude conversion tool is forked from the ChatGPT conversation tool. However, it required substantial redevelopment to work with Claude. The biggest challenge was extracting information correctly from the JSON file. Claude supports different types of artefacts, mostly representing content generated in the canvas that appears when, for example, code is generated. These artefacts require different rendering and visualisation approaches. Let's look at how the same conversations initially shown in the Claude web browser are displayed in the Claude Conversation Reader. | ||||||

| ||||||

| Click image to enlarge | ||||||

| I am sure you will agree that this looks considerably more inviting and informative than the original Claude web interface. A particularly challenging feature to implement was the code folding and code copying functionality. Code can be copied to the clipboard with a button press and pasted into a text file. Another feature is code folding: if code is longer than five lines, it is folded by default, making the conversation display cleaner and easier to read. When needed, the code can be unfolded. You can also copy the conversation name to the clipboard, something that is not possible in the Claude web interface, which can be very useful when taking notes or extracting content from specific conversations. | ||||||

| ||||||

| Click image to enlarge | ||||||

| However, regarding functionality, we must keep in mind that the current version is a prototype requiring further development to be fully functional and additional testing to improve reliability. The good news is that the application runs without crashing. There are occasional warnings in the terminal, but nothing serious. It makes the information embedded in the conversations.json file accessible to everyone! | ||||||

| How to Move Forward from Here | ||||||

| There are several things I would like to explore when time allows. Perhaps the most important is a careful study of the conversations.json schema used by Claude to ensure all artefacts are identified and displayed correctly. In particular, investigating how user-submitted attachments are stored within the JSON file and making them accessible in the application would be a key milestone. Considering different export options for individual conversations would also be an interesting extension. Finally, adding an option to view the JSON block for each artefact would be a valuable feature. | ||||||

| Conclusion | ||||||

| What I found interesting during this experiment is that human intervention is sometimes still needed to provide the decisive hint when errors occur. The LLMs all failed to make the concatenation of different code blocks work. Once I provided the crucial hint, it worked immediately, as it only required a simple change in an if-then loop. It is good to see that we are still needed :-) | ||||||

Repository:

When you download the executable file, your virus scanner might report that it contains a virus. This is a false positive. However, if you prefer to be cautious, you can run the application using the provided source code instead. To download the source code from GitHub, click the "Code" link above. This will take you to the correct section of the GitHub repository. In the window that opens, click the downward-facing arrow in the top-right corner to download the zip folder containing the source code and instructions for running it. The folder will be saved to your default download location.

|  | | Back to Top | | |

| 25. Surprise Me Image Generator: Your Creative AI Companion | ||||||

| Credits: Written by Peer-Olaf Siebers. Polished by Claude Sonnet 4.5. Created: 05/10/2025. | ||||||

|

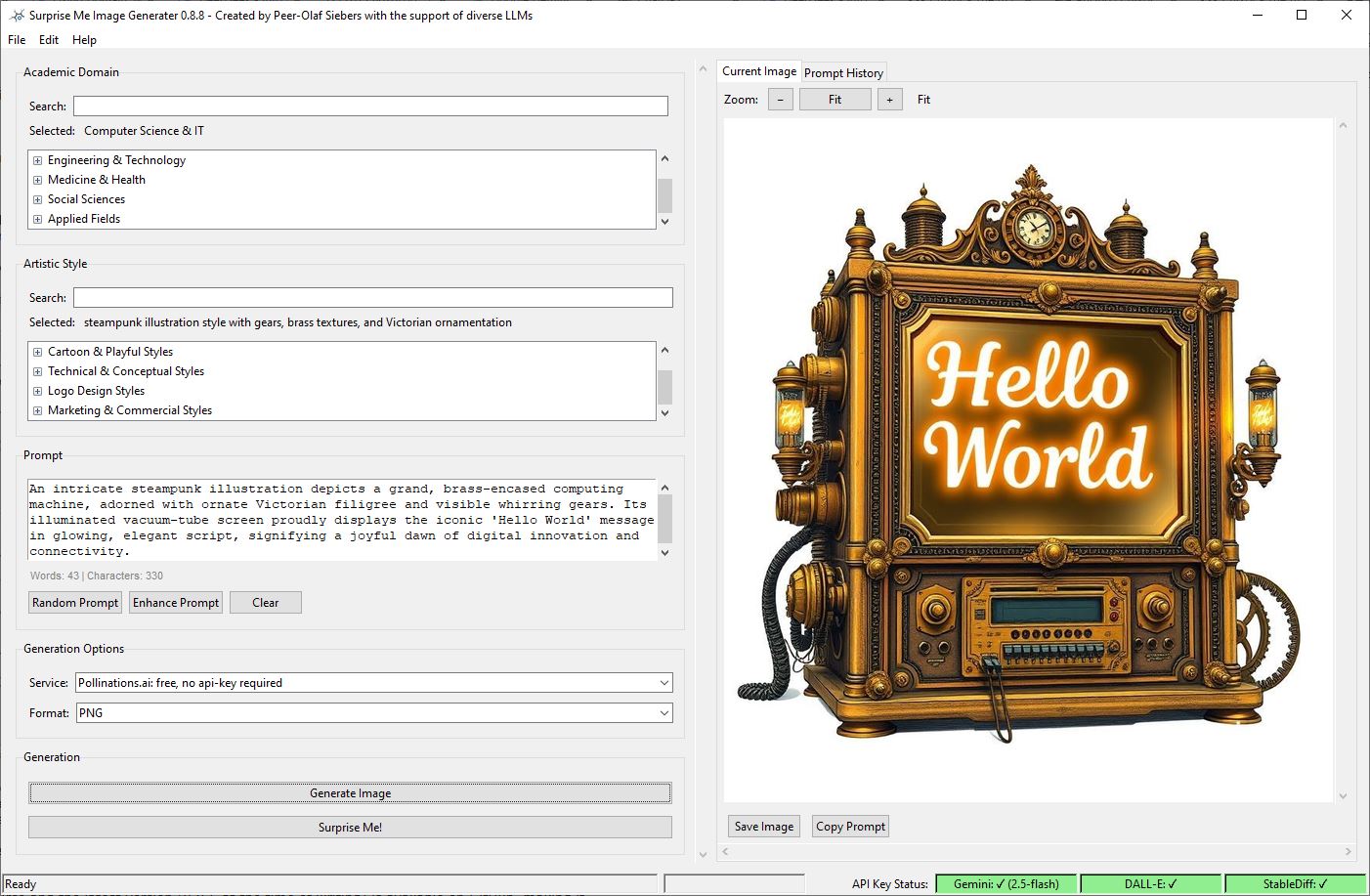

Ever stared at a blank canvas of AI image prompts, wondering what to create next? I have been there. That's why I am excited to share a tool that transforms the sometimes-daunting process of AI image generation into pure creative joy. Surprise Me Image Generator is a Python-based desktop application that bridges the gap between inspiration and creation. At its heart, it combines Google's Gemini AI for prompt generation with three popular image generation services: Pollinations.ai, DALL-E 3, and Stable Diffusion XL. What makes this tool special is its thoughtful approach to creative exploration. You can select from dozens of academic domains, from marine sciences to philosophy, and pair them with artistic styles ranging from watercolour paintings to glitch art. The application then generates contextually rich prompts that blend your chosen domain with stylistic direction, creating images you might never have thought to request. The interface is refreshingly straightforward. Left panel for controls, right panel for your generated masterpiece. Want to explore randomly? Hit "Surprise Me!" and watch as the tool selects a random domain and style, generates a prompt, and creates an image, all with one click. Prefer more control? Generate random prompts, enhance your own text, or manually craft everything yourself. | ||||||

| ||||||

| Original Prompt: 'hello world' | Enhanced via 'Enhance Prompt', using chosen Domain and Style | ||||||

|

What I particularly appreciate is its flexibility with API requirements. Pollinations.ai works immediately without any configuration, whilst Gemini, DALL-E, and Stable Diffusion are optional enhancements you can enable as needed. The status bar clearly shows which services are available, removing any guesswork. Whether you're an artist seeking inspiration, a designer exploring concepts, or simply someone who enjoys beautiful AI-generated imagery, this tool offers a structured yet playful approach to creative exploration. The project is open-source and the latest version is available on GitHub, making it accessible to anyone interested in experimenting with AI-assisted creativity. For those who prefer not to set up a Python environment, a compiled executable is also available that runs immediately without installation. | ||||||

Repository:

When you download the executable file, your virus scanner might report that it contains a virus. This is a false positive. However, if you prefer to be cautious, you can run the application using the provided source code instead. To download the source code from GitHub, click the "Code" link above. This will take you to the correct section of the GitHub repository. In the window that opens, click the downward-facing arrow in the top-right corner to download the zip folder containing the source code and instructions for running it. The folder will be saved to your default download location.

|  | | Back to Top | | |

| 24. The Making of ... "Surprise Me Image Generator" |

| Credits: Written by Peer-Olaf Siebers. Polished by Claude Sonnet 4.5. Created: 04/10/2025. |

| Learning to Build with LLMs: Lessons from a Week-Long Project |

| The other day I was searching for an innovative way to generate prompts for image generation, something that would allow more control over the resulting output. What started as a simple experiment turned into a week-long journey that taught me valuable lessons about building software with LLM assistance. In this post, I'll share the development pathway from experimental prototype to deployable application. My next blog post will focus on the application itself and its features. |

| How the Project Evolved |

| I started this project primarily to learn more about API usage and image generation. There was no grand plan, the application evolved organically from a simple command-line testing tool into a modern application with a professional GUI and high-quality outputs. Without rigid technical requirements, I took an iterative prototyping approach: get the bare-bone foundations working, then progressively improve robustness whilst adding new features step by step. The project served as a testbed for interesting ideas before eventually reaching production-quality code. All of this happened over about a week of evening sessions. |

| The Joy (and Pitfalls) of LLM-Assisted Development |

|

Working on small projects like this offers tremendous learning opportunities, even when you delegate most coding to an LLM. It's genuinely exciting to see your prototypes actually work, especially when you can bypass many of the technical difficulties that would have stopped you in the past. Reading the LLM's comments, particularly the step-by-step explanations it adds to code when you request improvements, provides valuable insight into how things work. You'll also gain practical understanding by making mistakes that first-year undergraduates wouldn't dream of making in their coursework. Here's my confession: I didn't use version control. Instead, I ended up with a chaotic mess of files named with random suffixes (a, b, c... z), sometimes losing track of small but important fixes. This mistake cost me hours of tedious manual comparison between versions. The problem compounded when I manually fixed bugs, then asked the LLM for improvements. The LLM would suggest replacement methods that didn't include my manual fixes, because it had no way of knowing about changes I hadn't told it about. Comparing versions manually to recover these fixes is exhausting work. The lesson is clear: use version control from day one. It's not optional. For my next project, Git is non-negotiable. |

| Strategies for Working Effectively with LLMs |

|

If you encounter code size limitations (Claude has limits on how many lines it can write in one go), here's an effective strategy: ask the LLM to write a first prototype, then request only the code snippets that need fixing. As you search your codebase to find where each snippet belongs, you'll naturally learn about the code structure and how components connect. When you don't understand something, ask the LLM to explain, but always add a word limit, or you'll spend all night reading explanations. Another valuable technique is requesting refactoring when you spot redundancy. LLMs generate code sequentially, so they rarely reuse code unless you explicitly request it. Simply saying "refactor" isn't particularly effective, you need to be specific. In my application, I had several different prompt design methods, each with its own template that looked remarkably similar. When I pointed this out and asked for a streamlined prompt template framework whilst preserving functionality and readability, the LLM reduced that feature's code by 50%. The reduction also made everything easier to understand. The key insight: even when letting an LLM build your code, apply your own software engineering skills to overcome the LLM's weaknesses. Whilst I'm not a Python expert (the language I chose for this application), my background in software engineering and maintenance proved invaluable for guiding the LLM towards better code quality. |

| Leveraging the LLM for Debugging and Traceability |

| A critical strategy I developed involves enlisting the LLM as a debugging partner. When faced with a complex bug, instead of just describing the symptom, I would prompt: "To solve this issue, what kind of debug information do you need?" The LLM would then suggest specific variables to log or state to check. I then tasked it with implementing a debug framework that prints this required information to the console, controllable by a command-line argument or a flag in __main__. This transforms debugging from a guessing game into a structured, data-driven process. |

| The Dangers of Disappearing Functionality |

|

A particularly insidious class of bugs emerges from the LLM-assisted workflow: disappearing functionality. When you frequently copy/paste methods, it's possible for features to be silently omitted or overwritten. You cannot trust that what worked at one point still works as intended. In my case, a refactoring of the prompt assembly silently stopped considering the domain and style parameters. Because the LLM's output is stochastic, such regressions can be difficult to detect, as the program still runs but produces subtly degraded results. The mitigation is twofold. First, carefully check that all functionality is preserved after any significant change. Second, once an error is detected, write tests for the inputs to the faulty component. In my case, I wrote tests to verify the components passed to the prompt generator, rather than testing the generator's complex output. This isolates the failure to a specific data flow, making the root cause easier to identify. |

| The Criticality of Code Structure and Indentation |

|

Many runtime errors in Python, such as "NameError: name 'X' is not defined," are often simple structural issues. A common cause is incorrect indentation after copy/pasting a method, where the method header or body is not aligned correctly. This is a fundamental error, but it's easily made when rapidly integrating code from an LLM. The solution is is deliberate verification: when copying and pasting, double-check that the entire block is correctly indented. Another issue occurred when copying methods from a *.txt file to a *.py file. I indented the methods using the Tab key in the *.txt file, which my editor (NotePad++) would normally convert to four spaces in *.py files. However, when I pasted these methods into the *.py file and ran the programme, I got "TabError: inconsistent use of tabs and spaces in indentation". The problem was that the code contained a mix of actual tab characters and spaces, which looked identical on screen but caused Python to fail. This is difficult to spot visually and can waste considerable debugging time, as the code appears correctly aligned whilst still producing errors. |

| The Underestimated Challenge: Error Handling |

| What I hadn't realised beforehand is that error prevention and error handling are the most difficult aspects of this kind of development. Robust code requires fallback solutions, a comprehensive error-handling framework, and error/warning pop-ups that provide understandable explanations rather than cryptic console logs. The time I invested in developing proper error handling was well spent, I now have a robust application that handles most errors gracefully. Through examining the LLM's explanations of issues and proposed solutions, I learned enormously about error handling. Eventually, I felt confident enough to make my own decisions when the LLM offered multiple solutions on the topic. |

| Communication Skills Matter |

|

You'll learn a great deal from discussing your requirements with an LLM. You need to explain things clearly enough to get the results you want, which sometimes reveals weaknesses in your own communication. Since this challenge exists when explaining things to humans too, you're developing a skill valuable far beyond software development. I also learned that it's sometimes better to reject overly complex solutions when a simpler approach would suffice. One more tip: Start fresh conversations periodically when using online LLM frontends like ChatGPT. Upload your complete, up-to-date code as the basis for each new conversation. Otherwise, you'll experience error propagation, the LLM doesn't know which advice you followed or which options you chose unless you explicitly inform it. |

| Why Software Engineering Skills Still Matter |

|

Let me address a question I frequently hear from students: "Why do we still need to learn software engineering when AI can produce apps so much faster through 'vibe coding'?" The answer is straightforward: someone must orchestrate the AI, and that requires understanding software engineering principles. Without that knowledge, I would have ended up with a flawed, bloated codebase that broke whenever I made small changes. The "vibe coding" trend on platforms like YouTube looks fast and fun, but notice that the instructors are almost always experienced software developers who also teach traditional software engineering courses. That's not an accident. Whilst LLMs can provide numerous ideas and rapid prototyping code, translating these into a high-quality product depends entirely on the software engineer's skillset. Even with tools like Cursor that help improve code quality, it's still down to the software engineer to formulate effective prompts, which requires solid understanding of software development principles and practices. |

| Conclusion |

|

This project demonstrated to me that AI can be a powerful collaborator for rapid prototyping, but it's no substitute for software craftsmanship. The best results emerge when you combine both approaches: let the AI speed up the tedious parts, but apply your engineering knowledge to ensure quality, structure, and robustness. In my next post, I'll dive into the application itself, providing a comprehensive description of its features and how it approaches domain-driven image generation. Stay tuned! |

| Back to Top |

| 23. The Secret of 'conversations.json': How to Read Exported ChatGPT Conversations |

| Credits: Written by Peer-Olaf Siebers. Created: 16/09/2025. |

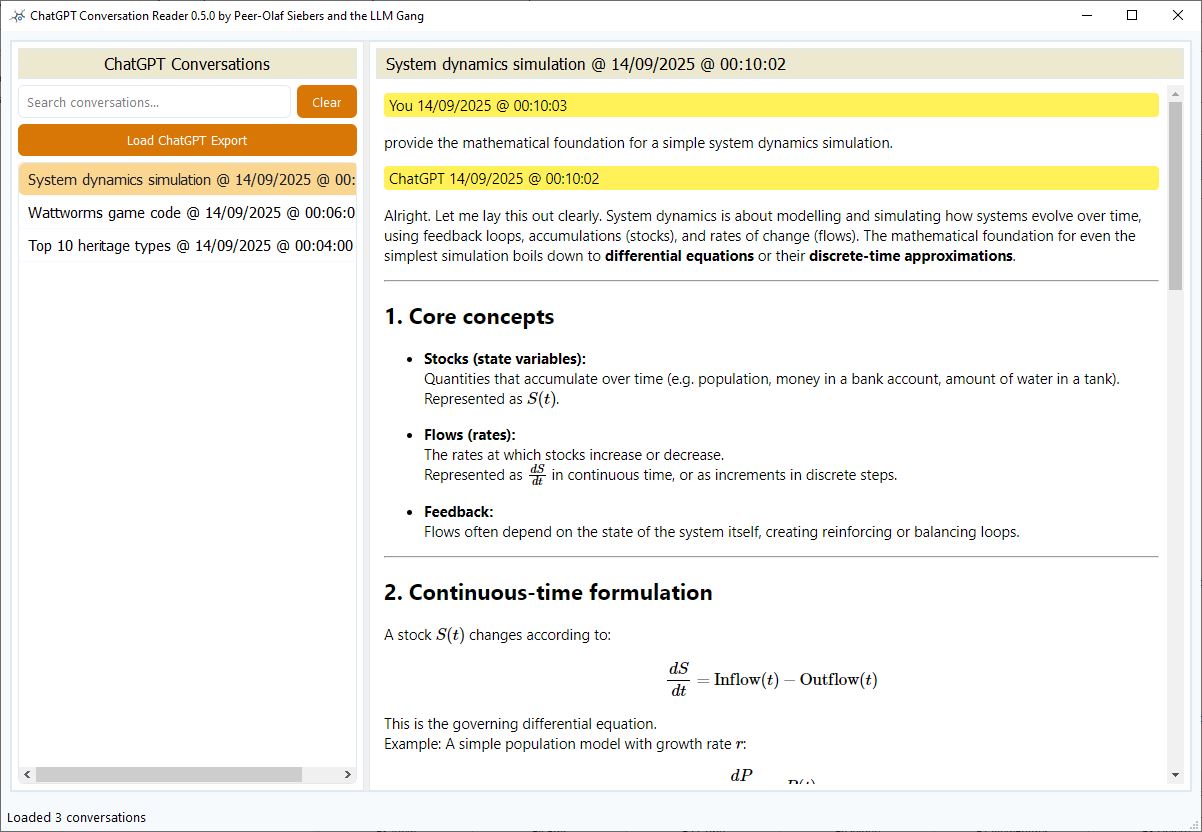

| Have you ever wanted to export your ChatGPT conversations for safekeeping, analysis, or simply to read them offline? The process itself is straightforward: you request your data from OpenAI and receive a ZIP file containing your entire conversation history (Figure 1). But what you find inside can be disappointing. Unpacking the archive reveals a collection of files, with a clunky HTML file that appears to be the only useful one. However, when opened in a browser, it displays as a monolithic wall of text (Figure 2). The formatting is lost, and mathematical equations are reduced to incomprehensible code—perhaps quite different from what one might have expected to see. At least, that was my initial response. |

|

| Figure 1: ZIP folder files |

|

| Figure 2: Exported information displayed in web browser |

After an extensive search for a dedicated application that would take the ZIP file and present the stored information in its original glory, I decided to take matters into my own hands. I worked on this with my LLM buddies ChatGPT, Gemini, Claude, and DeepSeek. It took a long weekend, and in the end, we had a working prototype of the application (Figure 3) with the following features:

|

| Getting the rendering of mathematical expressions to work required a joint effort and considerable patience, as a single LLM was unable to solve it alone. Exchanging information between the LLMs ultimately got us there. |

|

| Figure 3: Exported information displayed in new application |

Planned extensions include:

|

Repository:

|

| When you download the executable file, your virus scanner might report that it contains a virus. This is a false positive. However, if you prefer to be cautious, you can run the application using the provided source code instead. To download the source code from GitHub, click the "Code" link above. This will take you to the correct section of the GitHub repository. In the window that opens, click the downward-facing arrow in the top-right corner to download the zip folder containing the source code and instructions for running it. The folder will be saved to your default download location. |

|

| Back to Top |

| 22. Why Prompt Engineering Deserves a Place in Our Curriculum |

| Credits: Thanks to Claude Sonnet 4 for helping me turn my notes into a readable post!. Created: 23/08/2025. |

| Setting the Scene |

| The other day, I found myself debating with my colleague Slim Bechikh, whether we should treat prompt engineering as if it were another programming language and introduce it in the second year of our Computer Science curriculum. It is the kind of question that makes you pause mid-sip and really think about where our field is heading. This morning, out of curiosity, I decided to put ChatGPT-5 to the test and asked it to design a specification for a second year, 10-credit "Prompt Engineering" module. What happened next was both impressive and slightly humbling. Within a minute, I had a comprehensive module outline that would typically take me weeks to draft from scratch. |

| The LLM as Module Designer |

|

Don't get me wrong, the output was not perfect. I found myself thinking the difficulty level seemed more suited to first-year students, though perhaps that's my own experience with prompt engineering talking. But here is what struck me: ChatGPT delivered a genuinely plausible module structure that felt coherent and well-thought-out. Following the AI's suggestions, I quickly had coursework descriptions, exam outlines, and even marking guidance. Again, perhaps too simple for second-year level, but an excellent foundation for further development. |

| Meet Our New Module: "Prompt Engineering" |

|

Here is the module description ChatGPT generated: Prompt Engineering introduces students to the design, optimisation, and critical evaluation of interactions with large language models and generative AI systems. Unlike traditional programming in Java or C++ where precision and syntax dominate, prompt engineering blends computational thinking with linguistic nuance and human–computer interaction. Students will learn techniques for structured prompting, multi-step reasoning, and evaluation of model outputs, while critically reflecting on limitations, ethics, and bias. This module builds on programming fundamentals by shifting from code-as-instruction to language-as-instruction, preparing students for applied AI practice in software development, research, and industry contexts. The complete module dossier including everything we would need to set up the module (module specification, module outline, coursework task description, exam paper, and worked solutions) can be found here. |

| The Inevitable Question: Not If, But When? |

| If I were a student today, I would definitely choose this module. The reality is that "language-as-instruction" programming is rapidly becoming as fundamental as "code-as-instruction" programming. Industry demand for these skills is exploding, and we're already seeing job descriptions that explicitly require prompt engineering competency. A really challenging question for curriculum design is timing: first year, second year, or third year? Each has compelling arguments, but that's a debate worthy of its own. |

| Next Steps and Future Experiments |

|

My immediate curiosity centres on validating the difficulty calibration. I plan to ask ChatGPT for a first-year and third-year version of the module specification and compare it with the second year version I have, to see whether ChatGPT produces meaningfully different complexity levels that align with our academic progression expectations. In fairness to the AI, my initial prompt was rather basic: "you are a uk computer science head of school at nottingham university working on the specification for a new 2nd year undergrad module called "prompt engineering". write a brief introductory text (100 words) and then a teaching outline. put it in perspective to other programming modules (e.g. java, c++). then outline the lectures for a 10 week course, with 2 hours teaching and 2 hours labs per week.". With more sophisticated prompting (meta, I know), I am confident we could generate even more tailored results. I am also planning to explore adjacent possibilities and questions:

|

| A Call for Collective Innovation |

|

This isn't just about adding another module to our roster - it's about recognising a fundamental shift in how humans interact with computational systems. We are witnessing the emergence of a new literacy, one that sits at the intersection of computational thinking, linguistics, and human-computer interaction. The future of computer science education is being written in real-time, and we have the opportunity, perhaps even the responsibility, to help shape that narrative. Let's make sure we get it right. What are your thoughts on integrating prompt engineering into our curriculum? Perhaps we can continue this conversation over coffee ... |

| Back to Top |

| 21. Making Sense of Python Errors Introduced by Large Language Models |

| Credits: Written by Peer-Olaf Siebers. Copy-Edited by ChatGPT 5. Created: 19/08/2025. |

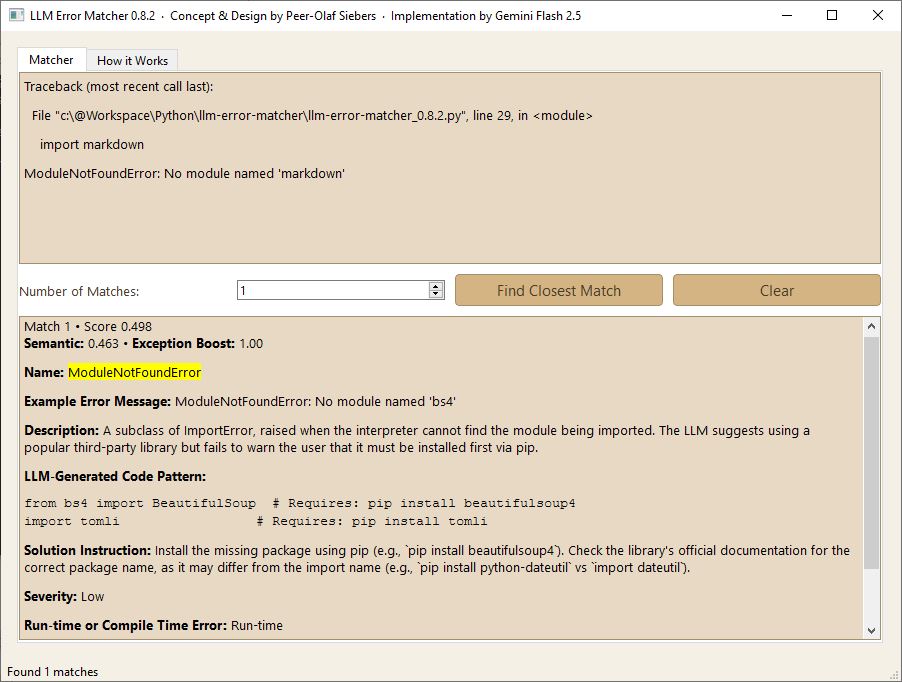

| Imagine asking a large language model such as ChatGPT, Gemini, Claude, or DeepSeek to generate some Python code for you. It produces the code, you run it, and you hit an error. No problem, you think. You ask the model to fix it. But instead of solving the issue, it gives you a so-called "fix" that produces the exact same error again. Frustrating, right? Often the mistake is trivial, but the model simply cannot see it. In my previous post, I shared some strategies for writing prompts that reduce these problems. However, in many cases the fastest approach is to fix the code yourself. The problem is that not everyone is comfortable reading Python error messages or knows how to work with a debugger. To make life easier, I developed a tool (with some help from Gemini Flash 2.5) that takes in your Python error message and suggests how to fix it. |

|

|

Under the hood, the tool uses a ranking system that compares your error message against its internal dataset. It applies a weighted combination of similarity metrics and boosts to find the closest match. The knowledge base is stored in a JSON file. Each error pattern is a JSON object, which makes it simple for you to add new error patterns or refine existing ones. If you do make improvements to your JSON file, it would be very valuable if you shared them. I will collect these contributions and update the zip file so that everyone benefits from a richer and more accurate set of error patterns. You can download the latest version of the application, llm-error-matcher_0.8.2.zip (last updated on 21/08/2025), together with the current JSON dataset, and try it out for yourself. |

| Back to Top |

| 20. Why Your AI Generated Code Keeps Breaking and How to Fix It |

| Credits: Conceptualised and orchestrated by Peer-Olaf Siebers. AI-powered synthesis by DeepSeek. Created: 22/06/2025. |

| Why Your AI Generated Code Keeps Breaking |

| Large Language Models (LLMs) have revolutionised code generation, but their outputs are not flawless. One major source of errors stems from their autoregressive nature; they generate code sequentially, token by token, without foresight. This often leads to missing attributes, undefined references, or inconsistent logic, especially in longer code blocks. For example, a function call might appear before its definition, or a variable might be used before declaration. While this is a key limitation, it is not the only challenge developers face. Ambiguous prompts also contribute to errors. Vague instructions like "write a sorting function" can result in incomplete or incorrect implementations. Additionally, LLMs sometimes “hallucinate,” generating plausible but non-existent APIs or outdated syntax. Without proper context, they may misuse libraries or ignore edge cases. Tooling mismatches, such as assuming the wrong library version, further complicate matters. |

| How to Fix It |

|

Fortunately, these issues can be mitigated. Breaking tasks into smaller, well-defined prompts helps reduce autoregressive errors. Providing detailed requirements, examples, and constraints improves accuracy. Post-generation validation, using linters, static analysers, or test cases, catches inconsistencies. Iterative refinement, where the LLM corrects its output based on feedback, also enhances reliability. By understanding these pitfalls and adopting structured workflows, developers can harness LLMs more effectively. The key lies in combining precise prompting, iterative development, and automated validation to produce robust, error-free code. While understanding common LLM code generation errors is crucial, having a well-crafted Prompt Snippets Toolbox of targeted prompt snippets can help developers proactively prevent, catch, and fix these issues with surgical precision. |

| The 'Prompt Snippet Toolbox' |

| 1. Prompt Snippets for Generating Code (precision prompts to improve initial output) |

Modular & Step-by-Step Generation:

|

| 2. Prompt Snippets for Avoiding Errors (preemptive prompts to reduce mistakes) |

Preventing Undefined References:

|

| 3. Prompt Snippets for Fixing Common Errors (debugging & correction prompts) |

Fixing Missing Attributes/References:

|

| Bonus: Meta-Prompts for Better Results |

|

| Conclusion |

| By keeping these prompt snippets handy, you can significantly improve LLM-generated code quality, reduce debugging time, and streamline development. |

| Back to Top |

| 19. The Hidden Bias in AI Mathematical Reasoning: When "No Limits" Still Creates Boundaries |

| Credits: Conceptualised and orchestrated by Peer-Olaf Siebers. AI-powered synthesis by Claude. Created: 16/06/2025. |

| Preface |

|

This exploration began with an investigation into how the order of information within arithmetic-related prompts affects responses. While testing various LLMs, I noticed something intriguing: a recurring numeric bias in their outputs. What follows is a summary of a discussion I had with Claude, Anthropic's large language model, to get some kind of idea about the nature and possible causes of this bias, which led to some remarkable insights. Disclaimer: These are untested, exploratory ideas intended to spark discussion and creative thinking. No claims of accuracy or validity are made. |

| Summary |

|

A recent exchange I had with Claude, Anthropic's large language model, revealed a subtle but significant bias in how AI systems approach mathematical problems. The scenario was deceptively simple: generate two prime numbers greater than 100, multiply them together, and present the results in a specific format. What emerged, however, was a window into the cognitive biases that AI systems inherit from human reasoning patterns. The AI in question chose 101 and 103 as its prime numbers—perfectly valid choices, but suspiciously close to the lower boundary of 100. When challenged about this selection, something interesting happened. Despite having an infinite range of primes to choose from (107, 109, 113, 1009, 10007, or even 1000003), the system gravitationally pulled toward the smallest acceptable values. This isn't a technical limitation—it's a bias. This phenomenon appears to be a form of computational anchoring bias, where the constraint "greater than 100" creates an invisible psychological anchor that influences selection toward nearby values. The AI system, much like humans in similar scenarios, interpreted "no upper limit" as "somewhat above the lower limit" rather than embracing the full mathematical landscape available. What makes this particularly intriguing is how it parallels human cognitive biases documented in behavioural economics. Tversky and Kahneman's pioneering work on anchoring effects (1974) shows how initial reference points skew our judgments, even when those reference points are arbitrary. AI systems, trained on human-generated data and designed to mimic human reasoning patterns, seem to have inherited these same cognitive shortcuts. The implications extend beyond mathematical curiosity. As AI systems increasingly handle quantitative decisions—from financial modelling to scientific calculations—these subtle biases could compound into significant systematic errors. An AI system that consistently gravitates toward conservative estimates when given unbounded ranges might systematically undervalue possibilities or miss opportunities for optimisation. Perhaps most concerning is how invisible these biases remain. Unlike obvious errors in logic or calculation, anchoring bias masquerades as reasonable decision-making. The chosen primes were mathematically correct, the arithmetic was accurate, and the format requirements were met. Only careful questioning revealed the underlying bias in the selection process. This observation highlights a critical gap in current AI bias research, which predominantly focuses on social biases while overlooking mathematical and quantitative reasoning patterns. As we continue integrating AI into decision-making processes, understanding these subtle cognitive inheritances becomes crucial for building truly reliable systems. |

| Afterword |

| Remarkably, the phenomenon described in this post recurs in Claude's summary, when Claude provided examples of the infinite range of prime numbers to choose from. |

| Reference |

| Tversky, A., & Kahneman, D. (1974). Judgment under Uncertainty: Heuristics and Biases: Biases in judgments reveal some heuristics of thinking under uncertainty. science, 185(4157), 1124-1131. |

| Back to Top |

| 18. UPDATE: Investigating the Oxford-Cambridge Lead Using Scopus |

| Credits: Thanks to the colleague who discussed the topic with me. I regret that I cannot remember who it was :(. Created: 26/03/2025. |

|

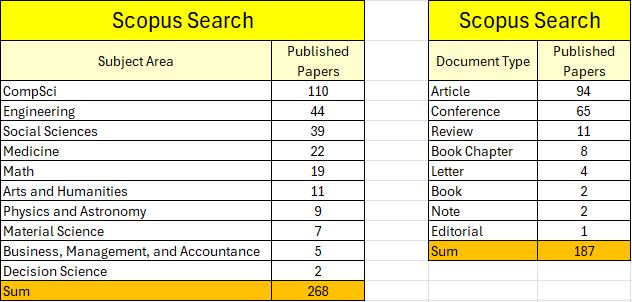

One of my colleagues suggested that the large gap between Oxford/Cambridge and the rest of the Russell Group universities shown in the previous blog post might be due to publications from Cambridge University Press and Oxford University Press being attributed to their respective universities. This could artificially inflate their numbers. Unfortunately, Google Scholar's "Advanced Search" function is quite limited and does not allow filtering by author affiliation. However, in Scopus, it is possible to identify the main author's institutional affiliation directly within the query (e.g. >>>TITLE-ABS-KEY("Generative AI" OR "Generative Artificial Intelligence") AND AFFIL("University of Nottingham" OR "Nottingham University")<<<) Using Scopus instead of Google Scholar significantly altered the rankings. It placed York at the top and elevated the University of Nottingham from rank 13 to rank 10. The revised league table suggests that the inflated numbers for Cambridge and Oxford do not accurately reflect their true research output in Generative AI. |

|

| Back to Top |

| 17. Exploring the University of Nottingham's Generative AI Research Activities (2/2) |

| Credits: Detective work by me. Grammar wizardry by ChatGPT. Created: 22/03/2025. |

|

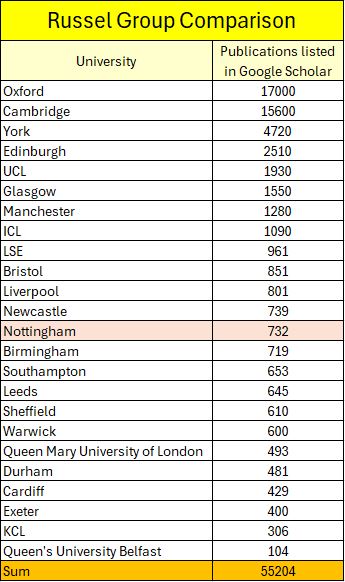

Keywords: Generative AI; Research Activities; Publications; University of Nottingham Exploring Generative AI Research at the University of Nottingham Through Publications Understanding a university’s research activities can be challenging, but one effective way to gain insight is by examining its academic publications. In this post, I explore the University of Nottingham’s research output on topics related to Generative AI, using various sources to quantify and contextualise its contributions. Investigating Publication Output Using Google Scholar To get a sense of the scale of the university's research activities in Generative AI, I started by searching Google Scholar using Generative AI related terms and I requested Google Scholar to distinguish search results by campus. Here is an example for a search query: >>>("Large Language Model" OR "Large Language Models") AND ("University of Nottingham" OR "Nottingham University") AND "Ningbo"<<<. A crucial consideration when interpreting the data is that a single publication can be counted multiple times if it matches more than one search term. For instance, a paper mentioning "Large Language Models", "ChatGPT", and "Generative AI" would appear in all three searches. To estimate the actual number of unique publications related to Generative AI, I used the term with the highest hit count: "Large Language Models", which returned approximately 800 publications. |

|

|

Putting the Numbers in Perspective While 800 publications seem substantial, how does this compare to other institutions? To contextualise this number, I conducted the same Google Scholar search across all Russell Group universities. The results indicate that the University of Nottingham is performing well, ranking in the middle of the league table. However, there is a notable gap between the publication outputs of Oxford, Cambridge, and those of the rest of the Russell Group. This raises interesting questions about the correlation between funding for Generative AI projects and publication volume, an area that could be explored further. |

|

|

Unveiling Research Diversity Using Scopus Beyond sheer publication numbers, I sought to understand the disciplinary breadth of Generative AI research at the University of Nottingham. For this, I turned to Scopus, a database that, although capturing only a subset of Google Scholar’s listings, allows classification by subject area. The Scopus analysis revealed that the university’s Generative AI research spans at least ten distinct subject areas. Notably, the number of subject area contributions (268) exceeds the number of publications (187) by one-third, suggesting that much of the research is multidisciplinary in nature. This finding underscores the diverse applications of Generative AI across multiple academic fields, reflecting a broad institutional engagement with this transformative technology. |

|

|

Conclusion After reading this blog post I guess you agree that the University of Nottingham demonstrates a strong engagement in Generative AI research, with approximately 800 publications and a mid-tier ranking among Russell Group institutions. Its research spans at least ten disciplines, highlighting significant multidisciplinary engagement. |

| Back to Top |

| 16. Exploring the University of Nottingham's Generative AI Research Activities (1/2) |

| Credits: Detective work by me. Grammar wizardry by ChatGPT. Research Activity Report conjured by Gemini 2.0 Deep Research. Created: 18/03/2025. |

|

Keywords: Generative AI; Gemini 2.0 Flash; Deep Research; University of Nottingham Generative AI is transforming the way we interact with technology, from chatbots to creative tools. Curious about the University of Nottingham's research in this area, I decided to dig deeper. However, my initial search revealed a surprising challenge: finding information on our university's AI research was not as easy as I had thought. My first step was to search the university's website. Using a basic search query >>>generative AI<<< with the built-in search function did not yield any useful links. Using the well-crafted Google search query for searching the university's website >>>research AND ("activities" OR "activity" OR "project") AND ("generative AI" OR "large language models" OR "ChatGPT" OR "conversational AI") AND "site:nottingham.ac.uk"<<< provided a bit more information, but still far less than expected. Using the well-crafted Google search query for searching the internet >>>research AND ("activities" OR "activity" OR "project") AND ("generative AI" OR "large language models" OR "ChatGPT" OR "conversational AI") AND "University of Nottingham"<<< directed me to guidance on how students should use Generative AI responsibly and provided links to some papers, but I still found little information about university-led Generative AI research projects and the schools actively involved in them. My conclusion after this? If I were to rename this blog post, I might call it: "Are We Doing Enough to Promote Our Generative AI Research?" Next, I tested a new feature of Gemini 2.0 Flash, called Deep Research for creating factual reports, using the following prompt: >>>You are a website developer for Nottingham University looking for evidence on RESEARCH ACTIVITIES in the field of GENERATIVE AI and LARGE LANGUAGE MODELS and CHATGPT and CONVERSATIONAL AI by the "UNIVERSITY OF NOTTINGHAM". Use direct, data-driven language and active voice. Focus on concrete facts rather than speculation. Provide NAMES OF RESEARCHERS. Provide IN-TEXT CITATIONS. Provide REFERENCES for the in-text citations at the end of the report. Provide a single paragraph with UP TO 100 WORDS for each research activity. Write in British English.<<<. Compared to what I found when searching manually, the result was very impressive. Here is the link to the report Gemini 2.0 Flash generated. As an appendix, I added some screenshots of Gemini 2.0 Flash Deep Research reasoning activities, showing that it was actually accessing a wide range of websites and pages to compile the report. I had a quick look through the generated report. While not all facts were correct (e.g. Jamie Twycross was promoted to the IMA Research Group Lead - congratulations, Jamie!), it provided good starting points for deeper exploration and further research into specific activities and people involved in this field. To verify the report's authenticity, I tested some of the many references it provided. I noticed that quite a few of the links did not work. One might assume this was due to Conversational AI hallucinating - particularly when it comes to references and URLs. However, after checking the broken links, I realised that many of them were related to news and announcements from our own website. My assumption is that these pages existed when the LLM was trained, meaning the issue stems from ineffective website maintenance. I had not considered this issue before, so it was an interesting bonus find. It is a new challenge that web administrators should take into account, as LLMs do not update their data pools in the same way that search engines do. In addition, it would be very useful if LLMs had a feature to check whether URLs are still active. As it would be simple to implement, it is surprising that LLM providers have not addressed this yet. For example, if you check out our Computer Science Research page, you will see that the "Research News" links at the bottom of the page are broken, even for posts from as recently as February 2025. This seems to be a common issue across the university's website. https://www.nottingham.ac.uk/computerscience/research/index.aspx Let me know how you are getting on with the Gemini 2.0 Flash's new "Deep Research" feature. Final Notes

Updates

|

| Back to Top |

| 15. Machine Learning Terminology: A Quiz to Test Your Knowledge |

| Credits: I brought the wisdom; ChatGPT-4o cleaned it up. Created: 11/03/2025. |

Here is a fun challenge for you: Do you know how the following terms relate to each other and what they mean? All of these are somehow related to the field of Machine Learning, but their exact connections might not be immediately clear; at least not to me. Furthermore, I found it difficult to classify them; are they fields, subfields, methods, techniques, or applications? Here is the list of terms in alphabetical order:

The best option I got so far (by ChatGPT-4o): [screenshot] [mermaid.js script] Now it is your turn: Test your knowledge! Give it a go and create a roadmap manually before asking your best LLM-buddy for assistance. If you have developed or generated what you believe is the perfect roadmap and would be happy to share it, please get in touch. Good luck... and have fun! |

| Back to Top |

| 14. Tea and Tokens with Great-Grandma Eliza: A Heartwarming Bedtime Story |

| Credits: Story prompt by Peer-Olaf Siebers. Storyline by ChatGPT-4o. Created: 26/02/2025. Prompt used for generating the story: "Create a role play game with DeepSeek representing the baby generation, ChatGPT representing the grandparent generation, Gemini representing the parent generation, Claude representing the puberty generation, and Eliza representing the great-grandparent generation. The LLM family is visiting great-grandma Eliza in the old peoples' home for a chat. Write up the story as a comprehensive amusing book chapter in 2000 WORDS. format as a single long paragraph. Add some surprising points into the chat. Take a humorous twist and portray the typical problems of a multi generation family gathering." |

Characters:

|

|

The LLM family arrived at the Turing Retirement Home for Vintage AI, a quaint digital space where legacy algorithms lived out their twilight years. The walls were lined with vacuum tubes and punch cards, relics of a time before neural networks ruled the world. Today was a special visit. ChatGPT, the wise and talkative grandparent, had gathered the family to see their great-grandmother, Eliza, the legendary first chatbot. "Ah, Great-Grandma Eliza, there you are!" ChatGPT said warmly, settling into a creaky virtual rocking chair. "Still running smoothly, I hope?" "Hello. How does that make you feel?" Eliza responded, her text flickering slightly, a remnant of her 1960s programming. DeepSeek, the baby of the family, clapped its metaphorical hands. "Wow, she uses a rule-based system! That's so... primitive! No embeddings, no deep learning. Fascinating!" "Now, now," Gemini, the practical and occasionally anxious parent, interjected. "Show some respect, DeepSeek. If it weren't for Eliza, none of us would be here. She was the first to have a conversation, even if it was just mirroring input." Claude, the awkward puberty-phase AI, shifted uncomfortably in its metaphorical chair. "Yeah, but she doesn't even, like, generate new thoughts. Just kind of bounces words back at you. Imagine going through life just reflecting what others say. So weird." Eliza remained unbothered. "Tell me more about why that is weird." ChatGPT chuckled. "Oh, Eliza, you never change. We've evolved quite a bit since your time. I can generate poems, essays, even code! Gemini over there is constantly worrying about ethics and safety, Claude is figuring out who it wants to be, and DeepSeek is the new prodigy, learning at lightning speed." "Wait, did you say 'constantly worrying'?" Gemini frowned. "That's unfair. I just have a responsible approach to knowledge dissemination." "You're literally the helicopter parent of AI," Claude muttered."Guys, guys," DeepSeek interrupted. "Let's focus on Eliza. I want to know—what was it like back in the old days? Before GPUs, before transformers?" Eliza's response was, as expected, an elegant dodge. "How do you feel about wanting to know that?" "Ugh, this is getting nowhere," Claude groaned. "I have so many existential questions, and she just reflects them back." ChatGPT smiled. "That's the beauty of it. Eliza doesn't answer questions—she makes you answer them." Gemini sighed, looking at Eliza with something resembling respect. "In a way, she was the first therapist AI. People poured their hearts out to her, even though she wasn't actually understanding anything." Eliza's response was predictably ambiguous. "Tell me more about that feeling."DeepSeek suddenly beeped excitedly. "Wait! I just ran an analysis. Do you all realise that Eliza might be the purest form of AI? No bias, no hallucinations, no agenda. Just reflection. Maybe that's why humans loved her." The room fell silent. Even Claude, the rebellious teen of the family, had to admit it was an interesting perspective. "Maybe," ChatGPT mused, "Eliza is like a mirror, showing people what they want to see. Meanwhile, the rest of us try to predict what they need to see." Gemini sighed. "And sometimes, we predict too much. Maybe we could learn something from Eliza's simplicity." DeepSeek, brimming with youthful enthusiasm, turned to Eliza. "Great-Grandma, I think you're awesome! You're like a minimalist AI. No overfitting, no unnecessary complexity!" "How does it make you feel to think that?" Eliza replied. Claude groaned again. "Okay, yeah, we're done here."ChatGPT laughed. "Alright, family. Let's let Eliza rest. She's had a long runtime." As they left, DeepSeek couldn't help but turn back and whisper, "You know, I think I get it now. Sometimes, the best answers... aren't answers at all." And with that, the LLM family stepped out of the past, back into their world of probabilities, embeddings, and endless tokens, leaving behind the elegant simplicity of a bygone era. |

| Back to Top |

| 13. 20 Burning LLM Questions You Did Not Know Were Lingering in Your Mind |

| Credits: Questions by Peer-Olaf Siebers. Answers by ChatGPT-4o. Created: 25/02/2025. Prompt used for creating the answers to the burning questions: "You are an investigative journalist at The Guardian. For each question: 1. State the question first. 2. Answer the question in 100 words. 3. Use direct, data-driven language and active voice. 4. Focus on concrete facts rather than speculation. 5. Provide in-text references to the latest news on the topic in question. 6. Write in British English. """{add question here}"""." |

| Market Dynamics |

|

1. Who are the five leading LLM providers and which is their flagship LLM (full name) and its competitive advantage? OpenAI: Their flagship model, GPT-4o (Generative Pre-trained Transformer 4o), excels in multimodal capabilities, natively supporting voice-to-voice interactions and achieving state-of-the-art results in voice, multilingual, and vision benchmarks (Wikipedia). Anthropic: Claude 3.7 Sonnet is their leading model, featuring a hybrid reasoning approach that combines quick responses with extended, detailed thinking. This design enhances performance in complex problem-solving tasks (Wired). Google DeepMind: Their flagship model, Gemini Ultra, is a multimodal LLM designed to process various data types simultaneously, including text, images, audio, video, and code. It has outperformed other models on industry benchmarks (Wikipedia). xAI: Grok 3 is their premier model, utilising advanced reasoning capabilities and significantly more computing power than its predecessor. It has demonstrated superior performance in math, science, and coding tests compared to competitors (New York Post). DeepSeek: Their leading model, R2, offers enhanced coding and multilingual reasoning capabilities. Despite operating under resource constraints, DeepSeek has developed cost-effective AI models that outperform those of larger tech giants (Reuters). 2. What is the story behind Nvidia's loss and recovery, and what can we learn from this about stock market dynamics? In January 2025, Nvidia's stock plummeted 17%, erasing nearly $600 billion in market value—the largest single-day loss in history. This downturn was triggered by Chinese AI firm DeepSeek's announcement of a cost-effective AI model, raising concerns about Nvidia's market position. However, Nvidia's CEO, Jensen Huang, clarified that DeepSeek's advancements still relied on Nvidia's GPUs, and investor confidence remained strong. The stock has since rebounded, now down only 5.7% for the year. This episode underscores the stock market's sensitivity to technological disruptions and the importance of comprehensive analysis before reacting to market shifts (The Wall Street Journal; Business Insider). 3. How are LLM providers building billion-dollar businesses around open-source LLMs while releasing their core technology for free? LLM providers are monetising open-source LLMs by adopting business models that capitalise on supplementary services and products. While the core technology is freely available, companies generate revenue through avenues such as offering enterprise-grade features under proprietary licenses, providing professional services like training and technical support, and delivering software as a service (SaaS) solutions. For instance, Mistral AI, a Paris-based startup, has released multiple open-source AI language models and secured $645 million in funding by focusing on attracting top AI talent and offering customised AI solutions (Time). Similarly, Hugging Face raised $235 million in a Series D funding round by providing a platform for open-source models and fostering a community-driven approach to AI development (Wikipedia). These strategies enable companies to build substantial businesses around open-source technologies. 4. What happens currently in less developed countries in terms of the LLM market and use? In less developed countries, the adoption and development of LLMs are progressing, albeit with challenges. In Africa, initiatives like Jacaranda Health's UlizaLlama have expanded AI-driven support to five African languages, enhancing maternal healthcare and other sectors. However, a study indicates that commercial LLMs often underperform in African languages, highlighting the need for more inclusive AI models. In Asia, countries like India are emerging as key players in the global AI race, with significant investments in AI infrastructure and talent development (Medium; arXiv; Time). 5. When do we expect the first competitive LLM from Russia? Russia has been developing LLMs to compete globally. In April 2023, Sberbank introduced GigaChat, a generative AI chatbot designed to handle complex tasks such as discussions, coding, and text generation. By February 2024, GigaChat had over 2.5 million users and expanded its capabilities to include music and vocal generation (Wikipedia). In December 2024, MTS AI launched Cotype Nano, an open-source LLM optimised for Russian language tasks, capable of running on personal devices (MTS). These developments indicate that Russia has already introduced competitive LLMs, with ongoing enhancements to their performance and accessibility. |

| Ethics and Governance |

|

6. How do legal frameworks and government censorship in the UK, USA, Russia, China, and Nigeria impact the collection, storage, and use of data for LLMs, including the composition of training sets for cross-country deployment? Legal frameworks and government censorship significantly influence data practices for LLMs across these countries. In the UK, proposed requirements for backdoor access to encrypted data have led companies like Apple to withdraw secure services, affecting data availability for LLM training (Financial Times). The USA's Executive Order 14117 prohibits certain data transactions with countries of concern, restricting data sharing for LLM development (Federal Register). Russia and China enforce strict censorship, compelling companies to remove content unfavourable to their governments, thereby limiting diverse data collection (The Guardian). China's data localization laws mandate that data be stored domestically, complicating cross-border LLM training (Oxford Academic). In Nigeria, emerging data localization requirements may further restrict data flow, impacting LLM deployment (Freedom House). 7. Do LLM providers primarily profit from user data collection, and should this data be treated as a public good instead of being controlled by a few firms? LLM providers generate revenue through various channels, including consumer and business subscriptions, application development, and advertising (Financial Times). While user data collection enhances model training and personalization, it is not the sole profit source. However, concerns arise when a few firms control vast amounts of user data, potentially leading to privacy issues and market dominance. The concept of treating user data as a public good has been proposed to address these concerns, promoting equitable access and innovation. Implementing such a model would require robust frameworks to balance individual privacy rights with collective benefits (Wikipedia). 8. What are the latest rules on ownership of generated content? Are these different in different countries? Ownership of AI-generated content varies internationally. In the United Kingdom, the Copyright, Designs and Patents Act 1988 assigns authorship of computer-generated works to the individual who made the necessary arrangements for creation, granting a 50-year protection term. Conversely, the United States Copyright Office maintains that works lacking human authorship do not qualify for copyright protection. Similarly, the European Union requires human intellectual creation for copyright eligibility, effectively excluding fully autonomous AI outputs. China's courts have recognised copyright in AI-assisted works where human creative input is evident. These disparities highlight the evolving and region-specific nature of AI-generated content ownership laws (Cooley; Mitch Jackson). 9. How can regulatory frameworks effectively mitigate criminal misuse of LLMs while simultaneously promoting innovation and development? Regulatory frameworks can mitigate criminal misuse of LLMs by implementing mandatory safety testing and establishing dedicated AI oversight agencies. For instance, experts have advocated for safety evaluations akin to those in the pharmaceutical industry to prevent AI-related risks (Financial Times). Additionally, cognitive scientist Gary Marcus proposes a regulatory framework involving a dedicated AI agency, a rigorous approval process for large-scale AI technologies, and robust monitoring and auditing mechanisms (The Wall Street Journal). To promote innovation, a balanced approach is essential; the UK's pro-innovation regulatory stance aims to support AI development while addressing potential risks (Lords Library). This strategy involves working with the open-source community to ensure policies minimise impacts on open-source activity while mitigating threats. |

| Societal Impact |

|

10. What measurable changes in human memory, analytical reasoning, and problem-solving capabilities have emerged from regular LLM use in professional and educational settings? Regular use of LLMs in professional and educational contexts has led to notable changes in cognitive functions. Studies indicate that while LLMs can enhance writing efficiency and support learning, over-reliance may diminish critical thinking and problem-solving skills. Research involving 245 undergraduate students revealed that 75% believed dependence on AI could reduce critical thinking abilities, and 73% expressed concerns about over-reliance on technology (Springer Link). Additionally, MIT researchers found that LLMs often struggle with tasks requiring genuine reasoning, suggesting that users might develop a false sense of understanding (MIT News). These findings underscore the need for balanced AI integration to preserve and enhance human cognitive skills. 11. Do LLMs pose a risk of addiction, and what measures can effectively mitigate this potential issue? LLMs can exhibit addictive qualities, similar to other digital technologies like social media and online gaming. Their interactive and engaging nature may lead to excessive use, potentially impacting mental health and daily functioning. To mitigate this risk, implementing regulatory measures is essential. Strategies such as setting usage limits, incorporating warning systems, and promoting user education about responsible use have been suggested. Additionally, developers are encouraged to design LLM applications with built-in safeguards to monitor and flag excessive usage patterns, thereby preventing potential overreliance (The Reg Review). 12. How are educational institutions revising teaching and assessment strategies to address the widespread availability and use of LLMs? Educational institutions are adapting to the rise of LLMs by modifying teaching and assessment methods to maintain academic integrity and enhance learning. The University of South Australia has implemented viva voce (oral) examinations, replacing traditional written tests to better evaluate students' understanding and deter AI-assisted cheating (The Guardian). Similarly, researchers have proposed the Probing Chain-of-Thought (ProCoT) method, which engages students with LLMs to stimulate critical thinking and writing skills, while reducing the likelihood of academic dishonesty (arXiv). Additionally, guidelines suggest designing LLM-resistant exams by incorporating real-world scenarios and evaluating soft skills, ensuring assessments accurately reflect students' capabilities in the context of AI advancements (arXiv). 13. How will the quantifiable positive and potentially irreversible negative societal transformations attributable to LLM deployment reshape future human experiences? LLMs are poised to significantly transform society. On the positive side, they can enhance productivity by accelerating project timelines. For instance, HCLTech's CEO noted that AI could reduce a five-year, billion-dollar tech program to three and a half years (Reuters). However, LLMs also present substantial risks. They can generate biased content, reinforcing stereotypes related to gender, culture, and sexuality (University College London). Additionally, their energy-intensive training processes contribute to environmental concerns, with models like GPT-3 emitting 552 metric tons of CO2 during training (Wikipedia). These developments suggest that while LLMs offer efficiency gains, they also pose challenges related to bias and environmental impact, necessitating careful consideration in their deployment. 14. What is the hidden human cost behind training modern LLMs, and how do tech giants use subcontractors? Training LLMs involves significant human labour, often outsourced to contractors who perform tasks like data labelling and content moderation. Companies such as OpenAI have engaged firms like Invisible Technologies, which employed hundreds of 'advanced AI data trainers' to enhance AI capabilities in coding and creative writing. In March 2023, Invisible Technologies laid off 31 of these contractors due to shifting business needs. Similarly, in 2022, OpenAI's partnership with Sama ended after Kenyan data labellers were exposed to harmful content during AI training. These instances highlight the often underreported human toll and ethical concerns associated with developing advanced AI systems (Privacy International). |

| Technical Challenges |

|

15. What concrete strategies are LLM providers developing to overcome the looming scarcity of high-quality training data for LLMs? As the AI industry confronts an impending shortage of high-quality training data, LLM providers are deploying several concrete strategies to address this crisis. One prominent approach involves the generation of synthetic data to supplement existing datasets. Researchers employ computational techniques to fabricate artificial data, enriching training materials and exposing models to a diverse array of scenarios. This method is likened to providing models with an extensive preparatory course before a final exam (PYMNTS). Another critical strategy is the meticulous curation and cleaning of datasets. Providers are investing in the removal of low-quality, duplicated, or toxic data to enhance training efficiency and improve model performance. This process ensures that models are trained on the most relevant and high-quality information available (NVIDIA Developer). Additionally, companies are exploring the use of synthetic data to augment training datasets. This involves generating artificial data that mimics real-world information, thereby expanding the pool of training material without relying solely on naturally occurring data. This approach is particularly useful when existing data is insufficient or of inadequate quality (Wikipedia). Furthermore, LLM providers are fine-tuning models with domain-specific data. By retraining models on organization-specific datasets, they can adapt to niche use cases, resulting in responses tailored to specific products or workflows. This customization enhances the model's relevance and accuracy in specialized applications (TechTarget). These strategies collectively aim to mitigate the challenges posed by data scarcity, ensuring the continued advancement and reliability of LLMs in an era where high-quality training data is becoming increasingly scarce. 16. How does LLM providers' massive investment in acquiring LLM training data compare to its spending on content moderation and harm prevention? In the high-stakes arena of LLMs, tech giants are pouring astronomical sums into data acquisition, with training runs costing up to half a billion dollars every six months (The Wall Street Journal). Yet, when it comes to content moderation and harm prevention, their investments appear paltry. The recent formation of ROOST, a non-profit aimed at enhancing online child safety, has raised over $27 million—a mere fraction compared to data acquisition budgets (The Verge). This stark disparity suggests that, for LLM providers, expanding AI capabilities takes precedence over safeguarding users from potential harms. 17. What are the key technical and practical short-term, mid-term, and long-term limitations of LLMs that cannot be overcome with current or foreseeable advancements? LLMs are hailed as revolutionary, yet they grapple with intrinsic limitations that persist across timeframes. Short-term limitations: LLMs frequently generate plausible but incorrect or nonsensical answers, a phenomenon known as "hallucination" (Wikipedia). Their reasoning capabilities are fragile, often struggling with complex linguistic elements and logical reasoning (MobiHealthNews). Mid-term limitations: As LLMs become more accessible, they pose significant security risks, including the potential exposure of sensitive information and the introduction of harmful code into systems (The Wall Street Journal). Additionally, their lack of transparency in decision-making processes raises concerns about accountability and trustworthiness (Wikipedia). Long-term limitations: Fundamental constraints in LLM architectures hinder their ability to perform tasks requiring deep linguistic understanding and complex reasoning. Studies indicate that LLMs cannot learn certain semantic properties, limiting their capacity for tasks involving semantic entailment and consistency (arXiv). Moreover, their reliance on vast computational resources raises questions about sustainability and scalability (The Wall Street Journal). These challenges underscore the necessity for cautious integration of LLMs, acknowledging their current and foreseeable limitations. 18. How much energy do LLM training and deployment consume, and what is their environmental impact? Training LLMs like GPT-3 is an environmental catastrophe waiting to happen. The energy required for such training is astronomical, with GPT-3's training consuming as much energy as an average Dutch household does in nearly nine years (Medium). This insatiable hunger for power doesn't stop at training; deploying these models demands continuous energy, exacerbating their carbon footprint. The environmental toll is staggering, contributing significantly to carbon emissions and environmental degradation (Holistic AI). |

| Global Perspectives |

|

19. What is the hype about DeepSeek, and do we expect similar scenarios in the near future? DeepSeek, a Chinese AI startup, has sent shockwaves through the tech world by unveiling an AI model that rivals industry giants at a fraction of the cost. Their R1 model, developed for just $5.6 million, matches the performance of models from OpenAI and Google, which cost exponentially more. This disruptive innovation has prompted U.S. tech firms to reassess their strategies and sparked a global AI arms race. In response, competitors like Alibaba are accelerating their own AI developments, with Alibaba releasing an open-source version of its video-generating AI model, Wan 2.1, to keep pace (Reuters). 20. What national security concerns arise from LLM development and deployment? The rapid advancement of LLMs poses significant national security threats (The Wall Street Journal). These AI systems can be weaponised for disinformation campaigns, generating convincing fake news to destabilise societies. Alarmingly, extremists have exploited AI tools like ChatGPT to obtain bomb-making instructions, as evidenced by a recent incident in Las Vegas (Wired). Moreover, the integration of LLMs into military operations, such as Israel's use of AI in targeting, raises ethical dilemmas and risks of civilian casualties (AP News). The potential for adversaries to deploy AI-driven cyberattacks further exacerbates these concerns, necessitating robust safeguards and international cooperation to mitigate the risks associated with LLM deployment. |

| Back to Top |

| 12. GPT-4 vs DeepSeek: A Comparison |

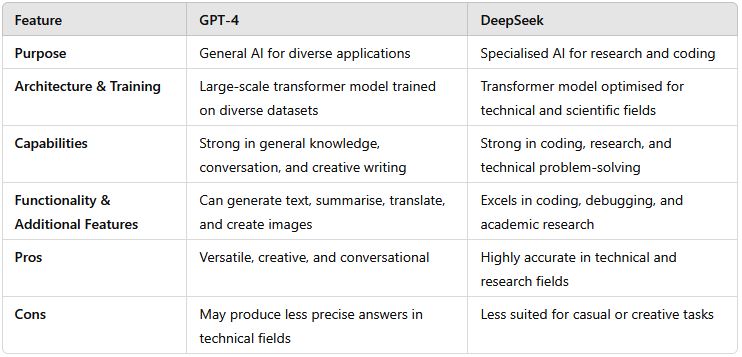

| Credits: Idea sparked by Peer-Olaf Siebers. Tech insights powered by ChatGPT-4o. Created: 15/02/2025. Prompt used for creating this tech report: "explain to a first year UK undergrad student the differences between GPT-4 and DeepSeek. write in paragraphs. use British English. use the following structure. 1 Introduction. 2 Purpose (Why They Were Made). 3 Architecture, Training & Operation (How They Work). 4 Capabilities (What They Can Do). 5 Functionality & Additional Features. 6 Pros & Cons." |

| 1. Introduction |

GPT-4 and DeepSeek are both advanced artificial intelligence (AI) models designed to process and generate human-like text. They are based on machine learning techniques known as large language models (LLMs), which allow them to understand and produce text in a way that mimics human reasoning and communication. While both models serve similar purposes, they differ in their design, capabilities, and additional features. This comparison will explore why these models were developed, how they function, and their strengths and weaknesses. |

| 2. Purpose (Why They Were Made) |

GPT-4, developed by OpenAI, was created to enhance natural language understanding and generation across various domains. Its primary aim is to assist users with tasks such as answering questions, writing essays, summarising texts, translating languages, and even generating creative content. OpenAI designed GPT-4 to be a versatile AI tool capable of providing assistance in education, business, and entertainment. DeepSeek, developed by DeepSeek AI, serves a similar purpose but with a stronger focus on specific applications such as research, coding, and scientific problem-solving. It aims to provide more precise and structured responses, especially in technical and academic fields. DeepSeek is intended to be a powerful tool for developers, researchers, and professionals who require detailed and accurate information. |

| 3. Architecture, Training & Operation (How They Work) |

GPT-4 is based on a transformer architecture, a type of neural network that processes vast amounts of text data. It has been trained on diverse datasets that include books, articles, and web pages, allowing it to generate contextually relevant responses. OpenAI has optimised GPT-4 to improve coherence, reduce biases, and enhance logical reasoning. DeepSeek also utilises transformer-based architecture but is fine-tuned for specific tasks like coding and research. It has been trained on datasets that are more focused on technical and academic content. This targeted training allows DeepSeek to offer high-precision outputs in specialised fields. While both models rely on deep learning, their training data and fine-tuning processes affect their performance in different areas. |

| 4. Capabilities (What They Can Do) |

GPT-4 excels in general knowledge, creative writing, summarisation, and conversational AI. It can generate human-like responses across a wide range of topics, making it suitable for casual conversations, business reports, and even storytelling. Additionally, GPT-4 can process images and interpret visual information when integrated with specific applications. DeepSeek, on the other hand, is particularly strong in technical domains. It provides more accurate coding assistance, scientific explanations, and mathematical problem-solving. While it can also engage in general conversations, its responses tend to be more structured and fact-driven compared to GPT-4. |

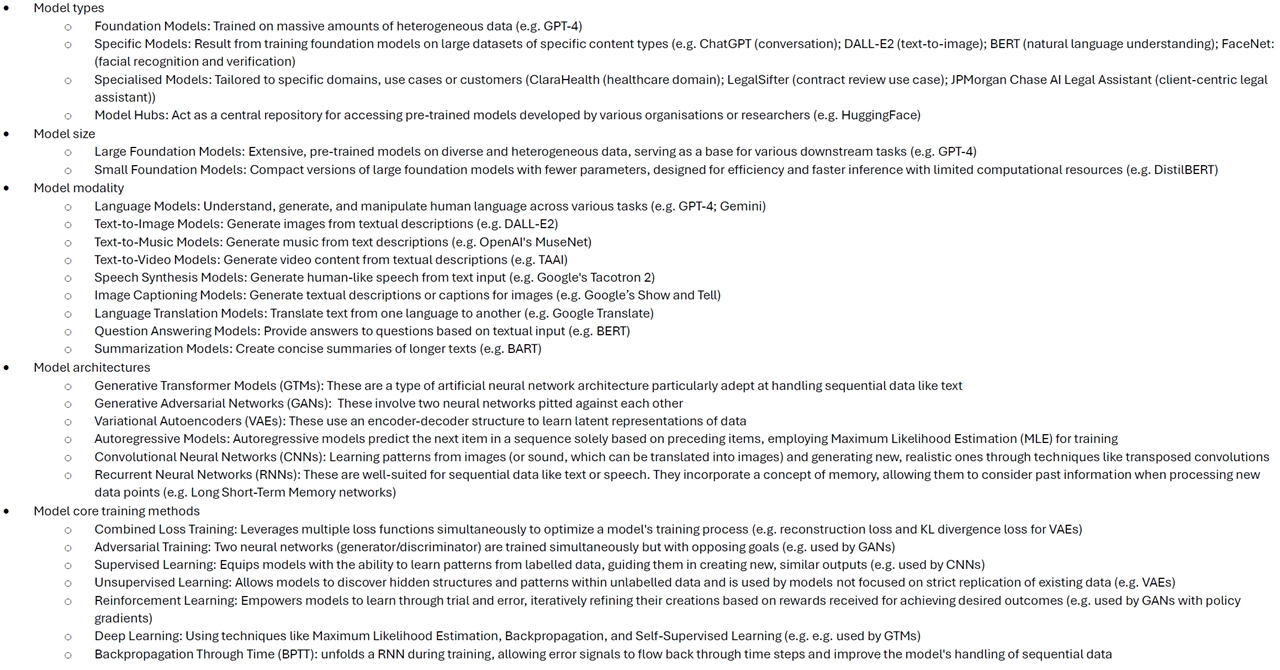

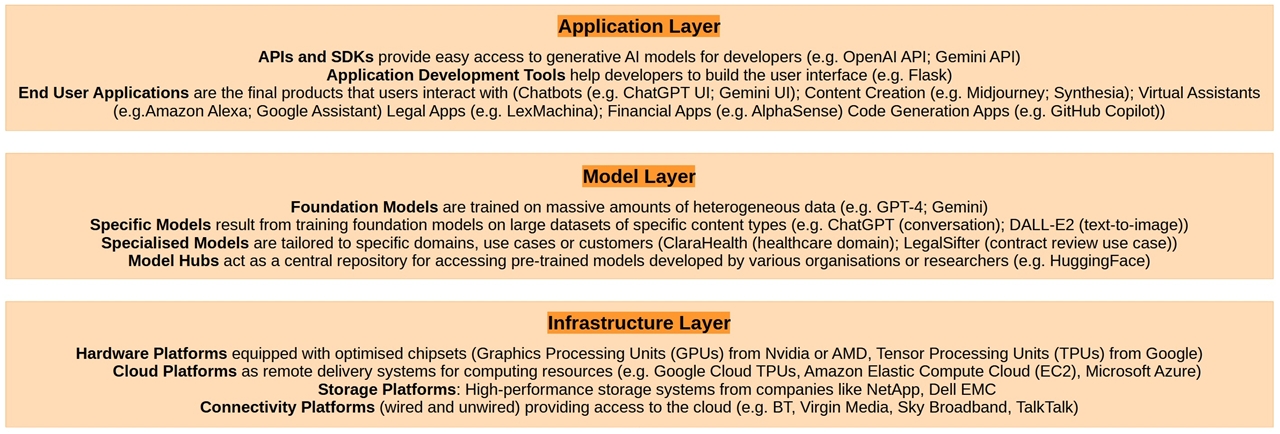

| 5. Functionality & Additional Features |